16th January 2026 : End-to-End Spoken Grammatical Error Correction by Pallavi Singh

Talk summary:

- Grammatical Error Correction (GEC) and feedback play a vital role in supporting second language (L2) learners, educators, and examiners. While written GEC is well established, spoken GEC (SGEC), which aims to provide feedback based on learners’ speech, poses additional challenges due to disfluencies, transcription errors, and the lack of structured input. SGEC systems typically follow a cascaded pipeline consisting of Automatic Speech Recognition (ASR), disfluency detection, and GEC, which makes them vulnerable to error propagation across modules. This work examines an End-to-End (E2E) framework for SGEC and feedback generation, highlighting the challenges and possible solutions when developing these systems. Cascaded, partial-cascaded, and E2E architectures are compared, all built on the Whisper foundation model. A challenge for E2E systems is the scarcity of GEC-labeled spoken data. To address this, an automatic pseudo-labeling framework is examined, increasing the training data from 77 to over 2500 hours. To improve the accuracy of the SGEC system, additional contextual information exploiting the ASR output is investigated. Giving candidates feedback on their mistakes is essential for helping them improve. In E2E systems, the SGEC output must be compared with an estimate of the fluent transcription to obtain this feedback. To improve the precision of the feedback, a novel reference alignment process is proposed that aims to remove hypothesised edits that result from errors in the fluent transcription. Finally, these approaches are combined with an edit confidence estimation approach to exclude low-confidence edits. Experiments on the in-house Linguaskill (LNG) corpora and the publicly available Speak&Improve (S&I) corpus show that the proposed approaches significantly boost E2E SGEC performance.

9th January 2026 : Dimensions of Structure and Variability in the Human Vocal Tract: From Manual Measurement to Few-Shot Deep Learning rtMRI Analysis by Chetan Sharma

Talk summary:

- A defining characteristic of the human vocal tract is the complex interaction between anatomical structure and inter-speaker variability. Across a population, vocal tract dimensions vary substantially, yet variation in one dimension is rarely independent of others, raising the question of whether invariant morphological relationships exist. In this work, we present a data-driven investigation of vocal tract dimensions using multi-speaker real-time magnetic resonance imaging (rtMRI) data, replicating prior findings that reveal distinct sub-populations of speakers who share common patterns of co-variation among vocal tract parameters. In addition to reproducing the original manual measurement framework, we extend this methodology by introducing an automated pipeline based on deep learning–driven Air–Tissue Boundary segmentation using the SegNet architecture. The segmentation models are pretrained on multi-speaker rtMRI data and subsequently adapted to new speakers using a small number of annotated frames, enabling reliable extraction of anatomical parameters with minimal manual effort. We show that the automatically derived measurements recover the core structure–variability relationships reported in the original study, demonstrating that large-scale vocal tract morphology analysis can be performed accurately and efficiently using automatic segmentation and few-shot model adaptation.

2nd December 2025 : Implementation of RAG pipeline with government schemes dataset by Kamali Ramesh

Talk summary:

- This talk describes the implementation of a retrieval-augmented generation (RAG) pipeline over a dataset of government schemes. The pipeline assists users with form filling, restricts irrelevant queries, and aims to answer all types of queries in Hindi, English, and Hinglish; its responses are evaluated across several metrics.

26th December 2025 : “Why Does CTC Become Peaky? An Analysis of Alignment Collapse in End-to-End ASR” by K Venu Reddy

Talk summary:

- Connectionist Temporal Classification (CTC) has become a foundational loss function for end-to-end automatic speech recognition (ASR) due to its ability to learn alignments between variable-length acoustic sequences and label sequences without explicit frame-level supervision. However, a well-known yet under-analyzed phenomenon in CTC-based models is the emergence of peaky posterior distributions, where most non-blank symbols receive high probability mass only at a small number of time steps, while the blank symbol dominates elsewhere. In this talk, we analyze the origins of this peaky behavior from both probabilistic and optimization perspectives. We show how the CTC marginalization over alignments, combined with conditional independence assumptions and softmax normalization, encourages over-confident frame-level predictions. This leads to sharp, spiky alignments that are brittle under noise, limit gradient propagation, and adversely affect downstream decoding and representation learning—especially in low-resource or noisy conditions. We further discuss the practical consequences of peakiness, including poor uncertainty modeling, over-reliance on blanks, and reduced robustness in streaming and multi-task ASR settings. Finally, we review and categorize existing mitigation strategies—such as label smoothing, alignment regularization, auxiliary losses, and hybrid attention-CTC formulations—and provide insights into how these approaches alter the training dynamics to produce smoother and more stable alignments. This analysis aims to deepen understanding of CTC’s training behavior and motivate principled design choices for more robust end-to-end ASR systems.
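The marginalization over alignments mentioned above can be made concrete with the standard CTC forward (alpha) recursion. The sketch below is a minimal NumPy illustration, not code from the talk: it assumes blank index 0 and per-frame softmax outputs `probs` of shape (T, V), and it cross-checks the recursion against brute-force enumeration of every frame-level path on a toy example.

```python
import itertools

import numpy as np

BLANK = 0  # assumption: index 0 is the CTC blank symbol


def collapse(path, blank=BLANK):
    """CTC many-to-one map: merge repeated symbols, then drop blanks."""
    out, prev = [], None
    for c in path:
        if c != prev and c != blank:
            out.append(c)
        prev = c
    return out


def ctc_forward_prob(probs, labels, blank=BLANK):
    """P(labels | x) summed over all valid alignments via the alpha recursion."""
    ext = [blank]
    for lab in labels:
        ext += [lab, blank]  # blank-interleaved label sequence of length 2U + 1
    T, S = probs.shape[0], len(ext)
    alpha = np.zeros((T, S))
    alpha[0, 0] = probs[0, ext[0]]
    if S > 1:
        alpha[0, 1] = probs[0, ext[1]]
    for t in range(1, T):
        for s in range(S):
            a = alpha[t - 1, s]  # stay on the same symbol
            if s >= 1:
                a += alpha[t - 1, s - 1]  # advance by one position
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                a += alpha[t - 1, s - 2]  # skip a blank between distinct labels
            alpha[t, s] = a * probs[t, ext[s]]
    # A valid path ends on the last label or on the trailing blank.
    return alpha[T - 1, S - 1] + (alpha[T - 1, S - 2] if S > 1 else 0.0)


def ctc_brute_force(probs, labels, blank=BLANK):
    """Sum path probabilities over every length-T path that collapses to labels."""
    T, V = probs.shape
    total = 0.0
    for path in itertools.product(range(V), repeat=T):
        if collapse(path, blank) == list(labels):
            total += np.prod([probs[t, c] for t, c in enumerate(path)])
    return total


rng = np.random.default_rng(0)
logits = rng.normal(size=(5, 3))  # T = 5 frames, V = 3 symbols (blank + 2 labels)
probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
print(ctc_forward_prob(probs, [1, 2]), ctc_brute_force(probs, [1, 2]))
```

Because the blank can occupy any frame that is not needed to emit a label, many of the summed alignments contain long runs of blanks; the analysis in the talk concerns how training on this sum concentrates non-blank posteriors on a few frames.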

12th December 2025 : Nested Learning: A new ML paradigm for continual learning by Seshan S

Talk summary:

- Over the past decades, improving neural architectures and their training algorithms has been central to machine learning research. Despite these advances, particularly in large language models, fundamental challenges remain in continual learning, self-improvement, and effective problem-solving. We introduce Nested Learning (NL), a paradigm that models a system as nested, multi-level, and/or parallel optimization problems, each with its own context flow. NL frames existing deep learning methods as compressing their context flow, naturally giving rise to in-context learning, and offers a path toward higher-order in-context and continual learning. We demonstrate NL through three contributions: (1) Expressive Optimizers that extend traditional gradient-based methods with deep memory, (2) a Self-Modifying Learning Module that learns its own update rules, and (3) a Continuum Memory System, generalizing short- and long-term memory. Combining these, our Hope module shows promising results in language modeling, few-shot generalization, continual learning, and long-context reasoning.

12th December 2025 : PINNs for Vocal Tract acoustic modelling by Atharva Jeevannavar

Talk summary:

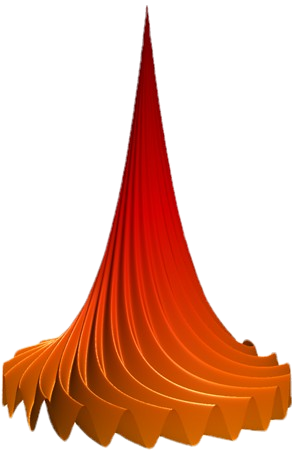

- Traditional mesh-based numerical methods for acoustic modeling are computationally expensive, while standard Physics-Informed Neural Networks (PINNs) often struggle to stably enforce boundary conditions (BCs). This paper presents a robust PINN framework for 1-D acoustic wave propagation that incorporates a trial solution formulation, enforcing BCs by construction to eliminate hyperparameter tuning and stabilize optimization. The framework is developed for both the Helmholtz and Webster’s horn equations, with tailored formulations for Dirichlet and mixed (Robin/impedance) BCs. A key extension to complex-valued fields enables accurate modeling of physically realistic, frequency-dependent impedance. The method is validated on three cases: a uniform duct, a conical frustum, and an MRI-derived human vocal tract geometry, across frequencies from 500 to 2000 Hz. Results show excellent agreement with analytical solutions for all scenarios, including complex radiation effects. The proposed trial-solution PINN thus provides an accurate, mesh-free, and highly stable alternative for solving complex 1-D acoustic problems.
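A trial solution that satisfies Dirichlet boundary conditions by construction can be sketched as follows. This is a minimal NumPy illustration under our own assumptions, not the talk's implementation: a duct of length `L` with prescribed pressures `p0` at x = 0 and `pL` at x = L, and an untrained random MLP (`make_mlp`, hypothetical) standing in for the network N(x). Whatever N(x) outputs, the factor x(L - x) vanishes at both ends, so the boundary values hold exactly.

```python
import numpy as np


def make_mlp(rng, hidden=16):
    """Hypothetical stand-in for the PINN: a tiny random tanh MLP mapping R -> R."""
    w1, b1 = rng.normal(size=(1, hidden)), rng.normal(size=hidden)
    w2, b2 = rng.normal(size=(hidden, 1)), rng.normal(size=1)

    def net(x):
        h = np.tanh(x[:, None] @ w1 + b1)
        return (h @ w2 + b2)[:, 0]

    return net


def trial_solution(x, net, L, p0, pL):
    """p_hat(x) = p0 (1 - x/L) + pL x/L + x (L - x) N(x).

    The first two terms interpolate the boundary values; the last term is
    zero at x = 0 and x = L, so the Dirichlet BCs hold for any network output.
    """
    return p0 * (1.0 - x / L) + pL * x / L + x * (L - x) * net(x)


rng = np.random.default_rng(1)
net = make_mlp(rng)
L = 0.17  # assumed duct length in metres, roughly vocal-tract scale
x = np.linspace(0.0, L, 101)
p = trial_solution(x, net, L, p0=1.0, pL=0.0)
print(p[0], p[-1])
```

Training would then minimize only the PDE residual (Helmholtz or Webster's horn equation) at interior points, with no boundary penalty term to weight. The mixed (Robin/impedance) case needs a different construction, which is not reproduced here.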

28th November 2025 : Domain-Adversarial Training of Neural Networks by Anshuman Mishra

Talk summary:

- We introduce a new representation learning approach for domain adaptation, in which data at training and test time come from similar but different distributions. Our approach is directly inspired by the theory on domain adaptation suggesting that, for effective domain transfer to be achieved, predictions must be made based on features that cannot discriminate between the training (source) and test (target) domains. The approach implements this idea in the context of neural network architectures that are trained on labeled data from the source domain and unlabeled data from the target domain (no labeled target-domain data is necessary). As training progresses, the approach promotes the emergence of features that are (i) discriminative for the main learning task on the source domain and (ii) indiscriminate with respect to the shift between the domains. We show that this adaptation behaviour can be achieved in almost any feed-forward model by augmenting it with a few standard layers and a new gradient reversal layer. The resulting augmented architecture can be trained using standard backpropagation and stochastic gradient descent, and can thus be implemented with little effort using any of the deep learning packages. We demonstrate the success of our approach for two distinct classification problems (document sentiment analysis and image classification), where state-of-the-art domain adaptation performance on standard benchmarks is achieved. We also validate the approach on a descriptor learning task in the context of a person re-identification application.
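The gradient reversal layer is simple enough to sketch without a deep learning framework. Below is a minimal NumPy illustration, not the authors' code: the layer is the identity in the forward pass and multiplies the incoming gradient by -λ in the backward pass, so the shared feature extractor is pushed to increase the domain classifier's loss while the label gradient passes through unchanged. The λ ramp 2/(1 + exp(-γp)) - 1 with γ = 10, where p is training progress in [0, 1], is the schedule reported in the DANN paper; `feature_grad` is a hypothetical helper that shows how the two gradients combine.

```python
import numpy as np


class GradientReversal:
    """Identity on the forward pass; scales the gradient by -lambda on the backward pass."""

    def __init__(self, lambd=1.0):
        self.lambd = lambd

    def forward(self, features):
        return features

    def backward(self, grad_output):
        return -self.lambd * grad_output


def grl_lambda(progress, gamma=10.0):
    """Adaptation factor ramped from 0 towards 1 as training progress goes 0 -> 1."""
    return 2.0 / (1.0 + np.exp(-gamma * progress)) - 1.0


def feature_grad(grad_from_label_head, grad_from_domain_head, grl):
    """Total gradient reaching the shared features.

    The label-classifier gradient flows through unchanged; the domain-classifier
    gradient passes through the GRL and is therefore reversed.
    """
    return grad_from_label_head + grl.backward(grad_from_domain_head)


grl = GradientReversal(lambd=grl_lambda(0.5))
print(feature_grad(np.array([0.2, -0.1]), np.array([0.5, 0.5]), grl))
```

In a framework with automatic differentiation, the same behaviour is usually obtained with a custom backward function rather than by combining gradients by hand.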

14th November 2025 : Dynamic Time Warping by Neelapuja Haritha

Talk summary:

- Dynamic Time Warping (DTW) is a foundational algorithm in speech recognition used to align two time-dependent sequences—typically a spoken input and a reference template—by minimizing the cumulative distance between them. It allows for flexible matching even when the sequences differ in length or speed, by warping the time axis to find the optimal alignment path. DTW operates under constraints like monotonicity and continuity, ensuring realistic temporal mapping, and is solved efficiently using dynamic programming. This technique was especially vital in early template-based systems for isolated word recognition.
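The dynamic-programming recursion described above can be sketched in a few lines. This is a minimal illustration assuming 1-D sequences and an absolute-difference local cost; for speech, each element would typically be a feature vector (for example an MFCC frame) compared with a Euclidean cost. It returns only the cumulative cost, not the warping path.

```python
import numpy as np


def dtw_distance(x, y, cost=lambda a, b: abs(a - b)):
    """Cumulative cost of the optimal monotonic, continuous alignment of x and y."""
    n, m = len(x), len(y)
    D = np.full((n + 1, m + 1), np.inf)
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            # Allowed predecessors (i-1, j), (i, j-1), (i-1, j-1) enforce
            # monotonicity and continuity: no step backwards, no skipped frames.
            D[i, j] = cost(x[i - 1], y[j - 1]) + min(D[i - 1, j], D[i, j - 1], D[i - 1, j - 1])
    return D[n, m]


# The same "word" spoken at two speeds aligns with zero cost.
print(dtw_distance([1, 2, 3], [1, 2, 2, 2, 3]))
```

The table has (n + 1)(m + 1) cells, so the cost is O(nm); template-based recognizers often added a band constraint around the diagonal to reduce this.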

24th October 2025 : Robust Language Identification using Phonotactics by Shubham Sharma

Talk summary:

- This work presents methods for robust language identification using phoneme-level features and adversarial domain adaptation. The model uses a Conformer architecture trained on phoneme posterior representations. A gradient reversal layer allows it to learn domain-invariant features across datasets and speaking styles. Experiments on Ekstep, IIT Mandi, VAANI, and IndicVoice show that multi-target adversarial training improves recognition accuracy, especially in unseen and cross-domain settings. Single-target adaptation gives mixed results, but multi-target training reduces domain mismatch and boosts generalization. This approach provides an effective way to build LID systems for low-resource, multilingual environments.

17th October 2025 : Lip and Jaw Kinematics in Bilabial Stop Consonant Production by Shreya Karkun

Talk summary:

- This paper presents two experiments on bilabial stop production. The first examined lip closure using kinematic, air pressure, and contact force data, revealing high lip velocity and mechanical interactions. The second explored lip–jaw coordination, variability, and effects of voicing and vowel context, finding no consistent voicing influence but confirming high-velocity closure. Overall, results support the idea that the lips aim for a negative lip aperture to ensure a tight seal despite contextual variations.

10th October 2025 : Improved Goodness of Pronunciation by Varshadhare K

Talk summary:

- Goodness of pronunciation (GoP) is typically formulated with Gaussian mixture model-hidden Markov model (GMM-HMM) based acoustic models, considering HMM state transition probabilities (STPs) and GMM likelihoods of context-dependent phonemes. On the other hand, deep neural network (DNN)-HMM based acoustic models employ sub-phonemic (senone) posteriors instead of GMM likelihoods, along with STPs. However, each senone is shared across many states; thus, there is no one-to-one correspondence between them. To circumvent this, most existing works have proposed modifications to the GoP formulation that consider only the posteriors, neglecting the STPs. In this work, we derive a GoP formulation that involves both senone posteriors and STPs. Further, we illustrate the steps to implement the proposed GoP formulation in Kaldi, a state-of-the-art automatic speech recognition toolkit. Experiments are conducted on English data collected from Indian speakers, using acoustic models trained with native English data from the LibriSpeech and Fisher-English corpora. The highest improvement in the correlation coefficient between the scores from the formulations and the expert ratings is 14.89% (relative) with the proposed approach, compared to the best of the existing formulations that do not include STPs.

26th September 2025 : Speech Intelligibility Prediction by Amartyaveer

Talk summary:

- Speech intelligibility is a measure of how well a listener can understand spoken language. It is strongly influenced by environmental factors such as noise and by transmission through different processing systems, both of which degrade the clarity of spoken communication in real-world conditions. Conventional approaches typically depend on subjective human listening tests, which are costly, time-intensive, and lack scalability. As a result, objective evaluation techniques, which predict intelligibility automatically without human involvement, have gained attention. Among these, intrusive methods require access to clean reference signals, which are rarely available in practical applications. In contrast, non-intrusive methods employ neural network models to estimate intelligibility directly from degraded speech, eliminating the need for reference signals. These techniques are designed to deliver consistent, reliable, real-time assessments. In this work, we examine the STOI algorithm and several non-intrusive approaches, and introduce our proposed method, demonstrating its performance in both seen and unseen noisy environments.

19th September 2025 : Intro to Hough Transform by Murali

Talk summary:

- The Hough Transform is a fundamental technique in computer vision for detecting geometric shapes like lines and circles in images. It works by transforming image points into a "parameter space" where potential shapes are represented. For lines, each edge point (x,y) in the image contributes votes to all possible lines that could pass through it in a 𝜌-θ parameter space. An accumulator array collects these votes, and strong peaks in this array correspond to the most prominent lines in the original image. This voting mechanism makes the Hough Transform highly robust to noise, gaps, and partial occlusions. While computationally intensive for complex shapes, its reliability and interpretability make it valuable for applications such as lane detection, document analysis, and medical imaging.
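The voting scheme for lines can be sketched with NumPy. This is an illustrative implementation under simple assumptions (edge points already extracted, 1-pixel ρ bins, 1-degree θ bins over [0°, 180°), and a caller-supplied `max_rho` at least as large as the farthest point's distance from the origin), not a production detector; libraries such as OpenCV provide optimized versions.

```python
import numpy as np


def hough_lines(edge_points, max_rho, n_theta=180):
    """Accumulate votes in (rho, theta) space for rho = x cos(theta) + y sin(theta)."""
    thetas = np.linspace(0.0, np.pi, n_theta, endpoint=False)
    cos_t, sin_t = np.cos(thetas), np.sin(thetas)
    acc = np.zeros((2 * max_rho + 1, n_theta), dtype=int)  # rows: rho in [-max_rho, max_rho]
    cols = np.arange(n_theta)
    for x, y in edge_points:
        rhos = np.round(x * cos_t + y * sin_t).astype(int)
        acc[rhos + max_rho, cols] += 1  # one vote per theta for this point
    return acc, thetas


def strongest_line(acc, thetas, max_rho):
    """Return (rho, theta in degrees) of the accumulator peak."""
    r, t = np.unravel_index(np.argmax(acc), acc.shape)
    return r - max_rho, np.rad2deg(thetas[t])


# Edge points on the horizontal line y = 5, plus two outliers (noise).
points = [(x, 5) for x in range(50)] + [(3, 40), (30, 12)]
acc, thetas = hough_lines(points, max_rho=80)
print(strongest_line(acc, thetas, max_rho=80))
```

The two outliers add only scattered single votes, so the peak at ρ = 5, θ = 90° (the normal to a horizontal line) still dominates, which illustrates the robustness to noise described above.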

12th September 2025 : Summary of PINNs Work by Veerababu

Talk summary:

- This talk summarizes the work carried out on PINNs in the SPIRE lab.

2nd September 2025 : Speech Time-Scale Modification With GANs by Jayin Khanna

Talk summary:

- The aim of Speech Time-Scale Modification (TSM) is to alter the speaking rate of audio while preserving naturalness and intelligibility. Recent approaches such as ScalerGAN have shown promise by learning an unsupervised mapping between original and time-scaled signals, without requiring explicit duration labels. We have successfully reproduced the results of ScalerGAN on the LJSpeech and SPIRE Lab datasets, validating its ability to perform content-agnostic scaling through adversarial spectrum learning and consistency decoding. However, a key limitation of ScalerGAN is that it uniformly stretches or compresses the signal, without regard for phonetic or linguistic content. To address this, our ongoing work explores incorporating content-aware mechanisms inspired by FastSpeech and WaveGlow, specifically leveraging length regulators and duration predictors to replace the interpolator operators within the ScalerGAN architecture. This hybrid framework aims to selectively rescale speech segments based on linguistic content (e.g., vowels vs. stop consonants), thereby improving perceptual quality and naturalness. The goal of this project is to develop a model that bridges the gap between content-agnostic and content-aware TSM, with the potential to advance state-of-the-art methods in flexible and intelligible speech rate modification.

15th August 2025 : Phoneme Classification using CNNs and Mel Spectrograms by Shahanaj khan

Talk summary:

- This project uses a convolutional neural network (CNN) to classify phonemes from audio by converting speech into mel spectrograms. These spectrograms capture time and frequency detail, enabling the CNN to learn patterns that distinguish 12 phoneme classes. The model achieves good accuracy and generalizes well across data splits, showing the effectiveness of deep learning for phoneme recognition tasks.
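A mel spectrogram warps the frequency axis onto the mel scale before pooling energy into bands. The sketch below uses the HTK-style conversion m = 2595 log10(1 + f/700) to compute the edges of equally mel-spaced triangular filters; the filter count (40) and frequency range (0 to 8000 Hz) are assumptions for illustration, not the project's settings.

```python
import numpy as np


def hz_to_mel(f_hz):
    """HTK-style mel scale: m = 2595 * log10(1 + f / 700)."""
    return 2595.0 * np.log10(1.0 + np.asarray(f_hz, dtype=float) / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (np.asarray(mel, dtype=float) / 2595.0) - 1.0)


def mel_band_edges(n_mels=40, f_min=0.0, f_max=8000.0):
    """n_mels + 2 frequencies (Hz) equally spaced on the mel scale.

    Consecutive triples (edges[i], edges[i + 1], edges[i + 2]) give the lower
    edge, centre and upper edge of triangular filter i.
    """
    mels = np.linspace(hz_to_mel(f_min), hz_to_mel(f_max), n_mels + 2)
    return mel_to_hz(mels)


edges = mel_band_edges()
print(np.round(edges[:5]), np.round(edges[-3:]))
```

The filters are narrow at low frequencies and wide at high frequencies. Libraries differ in which mel formula they use by default, so values from this sketch need not match a given toolkit exactly.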

25th July 2025 : Exploration of VAD Architectures for Short Pause Detection by Abhinith D

Talk summary:

- Voice Activity Detection (VAD) is a critical component in speech processing systems. In the context of language learning applications, accurately identifying short pauses is essential for providing feedback on user articulation, rhythm, and fluency. This report details the exploration and comparative analysis of three distinct deep learning architectures for this specific task: a Convolutional Neural Network (CNN), a Convolutional Long Short-Term Memory Deep Neural Network (CLDNN), and an attention-based LSTM network. The models were trained and evaluated on a custom dataset of English speech recordings, with performance measured using standard classification metrics including accuracy, precision, recall, and F1-score. This study presents the results of this comparison, highlighting the relative strengths and weaknesses of each architecture. The findings provide crucial insights for selecting a robust and accurate model suitable for integration into our English learning platform.
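For frame-level pause detection, the metrics listed above reduce to counts over per-frame binary decisions. A minimal sketch, assuming each frame is labeled 1 for a pause (non-speech) and 0 for speech; the choice of positive class and the framing are assumptions, not details from the report.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for per-frame binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# 1 = pause frame, 0 = speech frame
print(binary_metrics([0, 1, 1, 0, 1, 0], [0, 1, 0, 0, 1, 1]))
```

Because short pauses are usually a small fraction of frames, precision, recall and F1 on the pause class are more informative than accuracy alone.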

18th July 2025 : Project Report on Automation of Spirometric Coefficient Prediction by Bhavya Gaur

Talk summary:

- Spirometry is a standard lung-function test, but it depends on dedicated equipment, which makes it inaccessible in remote or resource-limited settings. Breath sounds carry biomarkers that might correlate with pulmonary function. Goal: can we predict spirometric values (FEV1, FVC) from audio alone?

18th July 2025 : Design and evaluation of audio annotation and classification pipeline using CNN and Random Forests models by Stuti Vats

Talk summary:

- This project presents a comprehensive pipeline for annotating and classifying spoken words in audio recordings. It integrates a PyQt5-based GUI and Audacity for efficient manual and semi-automated word-level annotation, followed by the development of machine learning models for classification. Convolutional Neural Networks (CNNs) and Random Forest classifiers were trained on three datasets with varying user and word-class distributions. The study compares their performance under different data regimes and analyzes how factors like silence trimming, class imbalance, and speaker variability impact model accuracy. While Random Forests perform better on small, low-class datasets, CNNs demonstrate scalability and are better suited for complex, high-class tasks.

18th July 2025 : Interference in Acoustic Waves: An acoustic-optical analogue to YDSE by Aditya Prakash

Talk summary:

- This experiment aims to verify the principles of Young’s Double Slit Experiment (YDSE) using an acoustic and signal-processing approach. Instead of conventional slits, two speakers are positioned behind a thin reflective film to create controlled acoustic disturbances. A laser beam is directed onto the reflective film, and the reflected light is detected by a photoresistor. As the interference pattern forms on the reflective surface due to the acoustic modulation, variations in light intensity are captured over time by the photoresistor. The recorded signals are then processed with digital signal processing techniques to extract interference characteristics. The presence of constructive and destructive interference in the data confirms the fundamental principles of wave interference described by YDSE, validating the experimental setup and providing a novel method of verification.

18th July 2025 : Sensitivity analysis of sentence embedding vector due to position dependent insertion, deletion and substitution errors in Hindi language by Sarth Santosh Shah

Talk summary:

- Sentence embeddings are critical for enabling semantic understanding across tasks such as translation, paraphrase detection, and cross-lingual information retrieval. Multilingual embedding models like LaBSE (Language-agnostic BERT Sentence Embedding) aim to project sentences from diverse languages, including low-resource ones like Hindi, into a shared semantic space. While LaBSE achieves strong performance on clean benchmark datasets, its robustness to real-world noise remains underexplored, particularly in morphologically rich and syntactically flexible languages. In this study, we systematically evaluate how LaBSE’s Hindi embeddings respond to various types of synthetic noise, including word insertions, deletions, substitutions, reordering, and character-level corruption. Through cosine similarity analysis and embedding-space visualizations, we quantify semantic drift under distortion and assess the model’s resilience. Our findings reveal both vulnerabilities and strengths, offering practical guidance for improving embedding robustness in noisy multilingual settings.
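The position-dependent perturbations and the cosine-similarity measure of drift can be sketched as follows. The encoder is the part that matters in the study (LaBSE) and is not reproduced here; `toy_embed` is a hypothetical bag-of-words stand-in that exists only to make the sketch runnable.

```python
import numpy as np


def insert_at(tokens, pos, word):
    """Insertion error: add `word` before position `pos`."""
    return tokens[:pos] + [word] + tokens[pos:]


def delete_at(tokens, pos):
    """Deletion error: drop the token at position `pos`."""
    return tokens[:pos] + tokens[pos + 1:]


def substitute_at(tokens, pos, word):
    """Substitution error: replace the token at position `pos` with `word`."""
    return tokens[:pos] + [word] + tokens[pos + 1:]


def cosine_similarity(u, v):
    u, v = np.asarray(u, dtype=float), np.asarray(v, dtype=float)
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


def toy_embed(tokens, vocab):
    """Hypothetical stand-in for a sentence encoder such as LaBSE: bag-of-words counts."""
    vec = np.zeros(len(vocab))
    for tok in tokens:
        vec[vocab[tok]] += 1.0
    return vec


clean = ["मैं", "घर", "जा", "रहा", "हूँ"]  # "I am going home"
vocab = {w: i for i, w in enumerate(clean + ["बाज़ार"])}
for pos in range(len(clean)):
    noisy = substitute_at(clean, pos, "बाज़ार")  # substitute "market" at each position
    drift = 1.0 - cosine_similarity(toy_embed(clean, vocab), toy_embed(noisy, vocab))
    print(pos, round(drift, 3))
```

A bag-of-words vector ignores word order, so this toy encoder reports the same drift at every position; measuring whether drift depends on the error position requires a contextual encoder such as LaBSE.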

18th July 2025 : Sensitivity analysis of sentence embedding vector due to position dependent insertion, deletion and substitution errors in Telugu language by SNEHITH KUMAR MATTE

Talk summary:

- Multilingual models like LaBSE map sentences from different languages—including low-resource ones like Telugu—into a shared meaning space. While effective on clean data, LaBSE’s robustness to noise is unclear. This study tests how Telugu embeddings respond to synthetic noise (e.g., word edits, character corruption). Using cosine similarity and visualizations, we measure semantic drift and highlight LaBSE’s strengths and weaknesses, offering insights to improve its performance in noisy settings.

18th July 2025 : ATB-Contour Based Speaker Segmentation by Guhan Balaji

Talk summary:

- This study presents a frame-wise speaker classification framework using Air-Tissue Boundary (ATB) contours derived from real-time MRI videos of the vocal tract. Each video frame’s contours are treated as an independent sample, enabling the analysis to focus solely on static anatomical configurations. The approach aims to classify speakers based on distinctive morphological features encoded in these static contours and further identifies which local regions or segments in the contours hold maximal speaker-discriminative potential. This methodology highlights the utility of structural vocal tract cues, independent of temporal information, for robust biometric speaker identification and provides new insights into the anatomical correlates of speaker individuality.

18th July 2025 : Residual Based Adaptive Refinement for PINNs by Gautam Sivakumar

Talk summary:

- Residual-based adaptive refinement (RAR) for PINNs iteratively swaps collocation points toward regions of highest PDE residual, concentrating training effort where the model performs worst. This simple, dynamic resampling sharply accelerates convergence and reduces the number of epochs and collocation points needed compared with uniform sampling.
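One round of the swap-style refinement described above can be sketched as follows. This is a NumPy illustration under stated assumptions, not the talk's code: a 1-D domain [0, 1], candidates drawn uniformly at random, and a hypothetical `residual_fn` standing in for the PDE residual of the current PINN (in practice computed with automatic differentiation). Each round, the k collocation points with the smallest |residual| are replaced by the k candidates with the largest |residual|, keeping the set size fixed.

```python
import numpy as np


def rar_swap(colloc, residual_fn, rng, n_candidates=1000, k=10):
    """Swap the k lowest-residual collocation points for the k highest-residual candidates."""
    candidates = rng.uniform(0.0, 1.0, size=n_candidates)
    keep = np.argsort(np.abs(residual_fn(colloc)))[k:]  # drop the k lowest-|residual| points
    add = candidates[np.argsort(np.abs(residual_fn(candidates)))[-k:]]  # k highest-|residual| candidates
    return np.concatenate([colloc[keep], add])


def residual_fn(x):
    """Hypothetical residual: the model is assumed to fit poorly near x = 0.8."""
    return np.exp(-((x - 0.8) / 0.05) ** 2)


rng = np.random.default_rng(0)
colloc = np.linspace(0.0, 1.0, 50)
for _ in range(3):
    # In practice the PINN is retrained between rounds, which changes the residual.
    colloc = rar_swap(colloc, residual_fn, rng)
print(np.sum(np.abs(colloc - 0.8) < 0.1))
```

After three rounds, most collocation points sit in the high-residual region around x = 0.8, while the total number of points stays at 50.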

11th July 2025 : A Generative Unsupervised Approach to Voice Activity Detection via Threshold Variance by Aditya Pandey

Talk summary:

- This study proposes an unsupervised voice activity detection (VAD) framework based on a generative U‑Net that jointly optimizes reconstruction loss and a novel multi‑threshold dissimilarity loss. The latter leverages multiple weak VAD estimators—each defined by soft thresholding at mean ± τ·σ with τ∈{0.05,0.10,0.15}—and measures output divergence via polynomial xor approximation. A U‑Net with 16 base features is trained for 100 epochs, decaying the reconstruction‑to‑dissimilarity weight α from 1 to 0.5, and ablation studies show that increasing model capacity and lowering α’s floor further tighten speech/non‑speech clustering. Embedding the pre‑trained latent space into a 50/50 semi‑supervised schedule yields consistent gains (Test ACC 96.6%, F1 97.0%) over both fully supervised (ACC 95.6%, F1 96.5%) and semi‑supervised baselines without custom loss.
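The weak estimators and the XOR-based dissimilarity can be sketched as follows. Several details are assumptions rather than the study's specification: the input is a per-frame energy feature, the soft threshold is a sigmoid with a hypothetical temperature of 0.1, both mean + τσ and mean - τσ are used for each τ, and the polynomial XOR approximation is taken to be a + b - 2ab, which equals XOR on {0, 1} and is differentiable in between. The U-Net and the reconstruction term are not reproduced.

```python
import numpy as np


def soft_threshold(x, thr, temperature=0.1):
    """Differentiable speech/non-speech decision: sigmoid((x - thr) / temperature)."""
    return 1.0 / (1.0 + np.exp(-(x - thr) / temperature))


def weak_vad_masks(frame_energy, taus=(0.05, 0.10, 0.15), temperature=0.1):
    """One soft mask per threshold mean + s * tau * std, for s in {+1, -1}."""
    mu, sigma = frame_energy.mean(), frame_energy.std()
    return np.stack([
        soft_threshold(frame_energy, mu + s * tau * sigma, temperature)
        for tau in taus
        for s in (1.0, -1.0)
    ])


def soft_xor(a, b):
    """Polynomial XOR: equals XOR on {0, 1}, smooth on [0, 1]."""
    return a + b - 2.0 * a * b


def multi_threshold_dissimilarity(pred, masks):
    """Average soft disagreement between a predicted mask and each weak estimator."""
    return float(np.mean([soft_xor(pred, m).mean() for m in masks]))


energy = np.concatenate([np.zeros(50), np.ones(50)])  # toy input: silence, then speech
masks = weak_vad_masks(energy)
good = (energy > 0.5).astype(float)
print(multi_threshold_dissimilarity(good, masks), multi_threshold_dissimilarity(1.0 - good, masks))
```

A prediction that agrees with the weak estimators scores close to 0, and an inverted one scores close to 1, so the term can be minimized by gradient descent alongside the reconstruction loss.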

11th July 2025 : Vowel consonant vowel sequence classification based on air tissue boundary segmentation by Kisalay Srivastav

Talk summary:

- This work presents an automated system for classifying Vowel-Consonant-Vowel (VCV) speech sequences using features derived from Air-Tissue Boundary (ATB) segmentation in real-time Magnetic Resonance Imaging (rtMRI) data. The study utilizes the USC-TIMIT corpus, comprising rtMRI videos and synchronized audio from 75 speakers, focusing on 40 subjects for detailed analysis. Each VCV sequence is represented by seven frames, with anatomical landmarks and contours extracted per frame.

3rd July 2025 : Final Presentation of SRFP 25 by Marita Jimmy

Talk summary:

- A summary of my internship at SPIRE lab, which evaluated the effect of silence on speech intelligibility using the Short-Time Objective Intelligibility (STOI) measure.

4th July 2025 : Accelerating RT-MRI Annotation Through Similarity-Based Contour Matching by Adarsh V V

Talk summary:

- This talk presents a semi-automated method for segmenting real-time MRI (RT-MRI) data of speech production. The approach leverages structural similarity (SSIM) to identify visually similar annotated frames and transfers their anatomical contours to unannotated targets. To assess accuracy, Average Hausdorff Distance (AHD) is used to compare the predicted contours against ground truth, revealing a strong inverse correlation between SSIM and AHD across thousands of frame pairs. The method achieves consistently low contour error while reducing manual annotation time by over 80%, offering a scalable and interpretable framework for articulatory segmentation in RT-MRI studies.
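The Average Hausdorff Distance used to score predicted contours can be computed directly from the two point sets. Definitions vary across papers; this sketch assumes the symmetric form that averages the two directed mean nearest-neighbour distances, with contours given as (N, 2) arrays of pixel coordinates. The SSIM-based frame retrieval step is not reproduced here.

```python
import numpy as np


def directed_mean_distance(a, b):
    """Mean, over points in a, of the distance to the nearest point in b."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)  # pairwise distances (Na, Nb)
    return d.min(axis=1).mean()


def average_hausdorff(a, b):
    """Symmetric average Hausdorff distance between two contours (assumed definition)."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return 0.5 * (directed_mean_distance(a, b) + directed_mean_distance(b, a))


gt = np.array([[x, 0.0] for x in range(10)])  # ground-truth contour
pred = gt + np.array([0.0, 1.0])               # prediction shifted by one pixel
print(average_hausdorff(gt, pred))
```

Unlike the classic Hausdorff distance, which takes the single worst nearest-neighbour distance, the averaged form is less sensitive to one outlying contour point.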

13th June 2025 : Pitch Annotation using ToBI And AuToBI by Mrinal Naveen

Talk summary:

- Pitch annotation helps analyze how speech sounds rise and fall, affecting meaning and emotion. ToBI is a system used to label these patterns, but it’s slow and needs expert input. AuToBI and its Python version AuToBI_py automate this process, making pitch and prosody annotation faster, scalable, and useful for speech analysis tasks like emotion detection and conversational AI.

30th May 2025 : Speech-Based ALS Classification Using CNN-LSTM, leveraging Wav2Vec2.0 Features by Deepthi S B

Talk summary:

- This study explores further pretraining of the Wav2Vec 2.0 speech model and using the resulting model as a feature extractor for a classification task that distinguishes healthy voices from those affected by ALS. It uses large audio datasets recorded on different devices. The process includes preparing the audio, creating manifests, and training the model using Fairseq. To evaluate the model, features are extracted from the audio and classified with a deep learning model (CNN-LSTM). The goal is to support reliable speech analysis and tracking of ALS progression.

28th March 2025 : LIMMITS’25: Multilingual Streaming TTS With Neural Codecs for Indian Languages by Jesuraj

Talk summary:

- This work provides a summary of the Multilingual Streaming TTS with Neural Codecs for Indian Languages challenge (LIMMITS’25), organized as part of the ICASSP 2025 Signal Processing Grand Challenge. For the challenge, 278 hours of TTS data in four Indian languages (Gujarati, Indian English, Bhojpuri, and Kannada) were released. The challenge focuses on advancing research in neural codec-based and streaming TTS systems. The top teams attained high subjective scores for naturalness and similarity, contributing to progress in text-to-speech generation systems.

28th March 2025 : Improving Dialect Identification in Indian Languages Using Multimodal Features from Dialect Informed ASR by Saurabh Kumar

Talk summary:

- Dialect identification (DID) addresses the challenge of recognizing regional variations within a language. Current deep learning approaches focus on audio-only, text-only, or multi-task setups combining automatic speech recognition (ASR) with DID. This work introduces a novel multimodal architecture that leverages speech and text features to enhance DID performance. Our method integrates ASR-generated speech representations with text embeddings derived from ASR hypotheses using a RoBERTa-based encoder. Additionally, we perform a layer-wise analysis of the IndicWav2Vec model to identify the layers most effective for extracting dialectal features. We evaluate our approach on a subset of the RESPIN dataset featuring eight Indian languages and 33 dialects. Experimental results show that our proposed multimodal DID system achieves an average DID accuracy of 79.81%, consistently outperforming baseline methods. This study is the first to comprehensively analyse DID in Indian languages, providing new insights into their dialectal diversity.

28th March 2025 : Physics-Informed Neural Networks for Predicting Acoustic Pressure Inside Ducts by Akanksha Singh

Talk summary:

- We present a novel demonstration of physics-informed neural networks (PINNs) for accurately predicting acoustic pressure fields in ducts without any reliance on ground-truth measurement data. By embedding the Helmholtz equation directly into the neural network training process, we show how knowledge of the physical properties of the duct, such as boundary conditions and material parameters, alone suffices to generate robust acoustic pressure predictions. Our method eliminates the need for experimentally recorded datasets, dramatically reducing costs and logistical complexity. In this demonstration, we train small-scale networks over just a few iterations for a range of frequencies. The results highlight the convergence and accuracy that can be achieved solely through the governing equation and domain specifications, with minimal computational overhead. We further extend this approach to more complex duct geometries, including shapes whose cross-section varies along the duct length, as in vocal tracts. This enables direct modeling of acoustic wave propagation within anatomically relevant structures, a powerful tool for speech science and related audio processing applications. Attendees will see how rapidly and effectively our PINN-based models adapt to different duct configurations, revealing their strong potential for future real-world use. Our interactive session features step-by-step explanations of the training process, a display of the network configurations, and visualizations of the resulting pressure fields. Participants will gain firsthand insight into how a physics-based loss function can guide neural networks to learn the underlying physics without requiring extensive labeled data.
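
The physics-based loss described above can be sketched in a few lines. This is a minimal sketch, not the authors' implementation: it assumes the 1-D Helmholtz equation p''(x) + k²p(x) = 0 with k = 2πf/c, assumed Dirichlet conditions p(0) = p0 and p(L) = pL, and it evaluates any candidate function `p` (standing in for the network) with a central finite difference instead of automatic differentiation. The helper names are hypothetical.

```python
import math

# Hypothetical helpers for illustration; a real PINN would use autodiff.
def helmholtz_residual(p, x, k, h=1e-3):
    """Residual of p''(x) + k^2 p(x) = 0, with p'' from a central finite
    difference (a PINN would get p'' from the network by autodiff)."""
    d2p = (p(x + h) - 2.0 * p(x) + p(x - h)) / (h * h)
    return d2p + k * k * p(x)

def physics_loss(p, k, length, p0, pL, n_colloc=50):
    """Mean squared PDE residual at interior collocation points plus the
    squared errors of the assumed Dirichlet conditions p(0)=p0, p(L)=pL."""
    xs = [length * (i + 1) / (n_colloc + 1) for i in range(n_colloc)]
    pde = sum(helmholtz_residual(p, x, k) ** 2 for x in xs) / n_colloc
    bc = (p(0.0) - p0) ** 2 + (p(length) - pL) ** 2
    return pde + bc

if __name__ == "__main__":
    c, f, L = 343.0, 500.0, 1.0        # speed of sound (m/s), frequency (Hz), duct length (m)
    k = 2.0 * math.pi * f / c          # wavenumber
    exact = lambda x: math.sin(k * (L - x)) / math.sin(k * L)   # p(0)=1, p(L)=0
    guess = lambda x: 1.0 - x / L      # meets both BCs but violates the PDE
    print(physics_loss(exact, k, L, 1.0, 0.0), physics_loss(guess, k, L, 1.0, 0.0))
```

With these values the analytical solution gives a loss close to zero, while the straight line, which satisfies both boundary conditions but not the governing equation, gives a large loss; minimizing this gap is what drives PINN training.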

28th March 2025 : Role of the Pretraining and the Adaptation data sizes for low-resource real-time MRI video segmentation by Vinayaka Hegde

Talk summary:

- Real-time Magnetic Resonance Imaging (rtMRI) is frequently used in speech production studies as it provides a complete view of the vocal tract during articulation. This study investigates the effectiveness of rtMRI in analyzing vocal tract movements by employing the SegNet and UNet models for Air-Tissue Boundary (ATB) segmentation tasks. We pretrained several base models using increasing numbers of subjects and videos, and assessed their performance on two datasets. The first consists of unseen subjects with unseen videos from the same data source; here the models performed 0.33% and 0.91% better (in Pixel-wise Classification Accuracy (PCA) and Dice Coefficient, respectively) than the matched condition. The second comprises unseen videos from a new data source; here the models reached 99.63% and 98.09% (PCA and Dice Coefficient, respectively) of the matched-condition performance. Matched-condition performance refers to the performance of a model trained only on the test subjects, which served as the benchmark for the other models. Our findings highlight the significance of fine-tuning and adapting models with limited data. Notably, we demonstrated that effective model adaptation can be achieved with as few as 15 rtMRI frames from any new dataset.
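
The two reported metrics can be illustrated on toy masks. This sketch assumes binary masks given as nested lists (1 = foreground, 0 = background); the exact mask format used for ATB segmentation and any per-class averaging are not stated, and the function names are hypothetical.

```python
# Hypothetical helpers for illustration: binary masks as nested lists.
def _flatten(mask):
    return [v for row in mask for v in row]

def pixel_accuracy(pred, target):
    """Pixel-wise classification accuracy: fraction of pixels with the same label."""
    p, t = _flatten(pred), _flatten(target)
    return sum(a == b for a, b in zip(p, t)) / len(t)

def dice(pred, target):
    """Dice coefficient 2|A n B| / (|A| + |B|) for binary masks (1 = foreground)."""
    p, t = _flatten(pred), _flatten(target)
    inter = sum(1 for a, b in zip(p, t) if a == 1 and b == 1)
    total = sum(p) + sum(t)
    return 1.0 if total == 0 else 2.0 * inter / total   # both masks empty: perfect match

if __name__ == "__main__":
    pred = [[0, 1, 1],
            [0, 0, 0]]
    target = [[0, 1, 0],
              [0, 1, 0]]
    print(pixel_accuracy(pred, target), dice(pred, target))
```

On this toy pair, pixel accuracy is 4/6 while Dice is 0.5, which shows why Dice is the stricter metric when the foreground covers only a small part of the frame.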

20th December 2024 : Denoising Diffusion Probabilistic Models by Jesuraj Bandekar

Talk summary:

- We present high quality image synthesis results using diffusion probabilistic models, a class of latent variable models inspired by considerations from nonequilibrium thermodynamics. Our best results are obtained by training on a weighted variational bound designed according to a novel connection between diffusion probabilistic models and denoising score matching with Langevin dynamics, and our models naturally admit a progressive lossy decompression scheme that can be interpreted as a generalization of autoregressive decoding. On the unconditional CIFAR10 dataset, we obtain an Inception score of 9.46 and a state-of-the-art FID score of 3.17. On 256x256 LSUN, we obtain sample quality similar to ProgressiveGAN.
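
The forward (noising) process at the core of DDPMs has a closed form, q(x_t | x_0) = N(sqrt(ᾱ_t) x_0, (1 - ᾱ_t) I) with ᾱ_t = ∏_{s≤t} (1 - β_s), which makes training cheap: sample a timestep, noise the data in one step, and train a network to predict the added noise. The sketch below assumes the linear β schedule from 1e-4 to 0.02 over T = 1000 steps used in the original DDPM paper, uses plain Python lists in place of images, and omits the noise-prediction network; the helper names are our own.

```python
import math
import random

# Hypothetical helper names; the noise-prediction network is omitted.
def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """beta_t rising linearly from beta_start to beta_end (index 0 is t = 1)."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]

def alpha_bars(betas):
    """Cumulative products alpha_bar_t = prod_{s <= t} (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def q_sample(x0, t, abar, rng):
    """Sample x_t ~ q(x_t | x_0) in one step; also return the noise eps,
    which is the regression target of the noise-prediction network."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = abar[t]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps

if __name__ == "__main__":
    abar = alpha_bars(linear_beta_schedule())
    rng = random.Random(0)
    x0 = [0.5, -0.2, 0.9]                 # stand-in for a flattened image
    for t in (0, 250, 999):               # signal fades as alpha_bar_t shrinks
        xt, _ = q_sample(x0, t, abar, rng)
        print(t, round(abar[t], 5), [round(v, 3) for v in xt])
```

A training step would then draw t uniformly, call `q_sample`, and minimize the squared error between `eps` and the network's prediction of it.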

13th December 2024 : Automatic Assessment of Speech Quality by Amartyaveer

Talk summary:

- Automatic Assessment of Speech Quality (AASQ) is a critical task in evaluating the intelligibility and overall quality of speech signals, especially in noisy environments or after processing by various systems. Traditional methods often rely on subjective human ratings, which are time-consuming and not scalable. Objective assessment methods, which predict speech quality automatically without human input, are therefore becoming popular. However, intrusive objective methods still require clean reference signals, which are often unavailable in real-world scenarios. In contrast, non-intrusive methods use neural networks to predict speech quality without clean reference signals. These methods aim to provide consistent, reliable, and real-time assessments. We explore various non-intrusive AASQ methods and present our method along with its performance on both seen and unseen data in different noisy conditions.

6th December 2024 : Intro to Bottleneck adapters and LoRA by Murali

Talk summary:

- Fine-tuning pretrained ASR models has been effective when substantial data is available. Are there techniques that use fewer resources while yielding models with similar performance? Adapters, of which there are many types, offer one workaround. This talk is a brief introduction to two of them: bottleneck adapters and LoRA (Low-Rank Adaptation).
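
Both adapter types can be sketched in NumPy. This is a minimal, forward-pass-only sketch assuming the standard formulations: LoRA adds a trainable low-rank update (alpha / r)·B·A to a frozen weight W, with B initialized to zero so training starts exactly from the pretrained model, and a bottleneck adapter adds a residual down-project, nonlinearity, up-project block (ReLU is our choice of nonlinearity). Class and function names, ranks, and sizes are hypothetical.

```python
import numpy as np

# Hypothetical names and sizes; forward pass only, no training loop.
class LoRALinear:
    """y = W x + (alpha / r) * B A x, with W frozen and only A, B trained."""

    def __init__(self, W, r=4, alpha=8.0, rng=None):
        if rng is None:
            rng = np.random.default_rng(0)
        d_out, d_in = W.shape
        self.W = W                                  # frozen pretrained weight (d_out, d_in)
        self.A = rng.normal(0.0, 0.01, (r, d_in))   # trainable down-projection
        self.B = np.zeros((d_out, r))               # trainable up-projection, zero init
        self.scale = alpha / r

    def __call__(self, x):
        # x: (batch, d_in) -> (batch, d_out)
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T


def bottleneck_adapter(h, W_down, W_up):
    """Residual bottleneck adapter: h + W_up ReLU(W_down h).
    W_down: (m, d) with m much smaller than d, W_up: (d, m); only these are trained."""
    return h + np.maximum(h @ W_down.T, 0.0) @ W_up.T


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    W = rng.normal(size=(16, 32))
    layer = LoRALinear(W, r=4, alpha=8.0)
    x = rng.normal(size=(3, 32))
    print(np.allclose(layer(x), x @ W.T))       # zero-initialized B: output unchanged at start
    print(layer.A.size + layer.B.size, W.size)  # trainable LoRA parameters vs. full matrix
```

For a 32-to-16 layer with r = 4, LoRA trains 4 × (32 + 16) = 192 parameters instead of 512, and the saving grows with layer size.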

29th November 2024 : Applying Physics-Informed Neural Networks to Duct Acoustics by Akanksha Singh

Talk summary:

- Recent advances in neural network architectures have led to significant breakthroughs across various domains, one of which is physical modeling using Physics-Informed Neural Networks (PINNs). This presentation delves into the application of PINNs to the complex problem of sound wave propagation in uniform ducts. Unlike traditional neural networks that learn solely from data, PINNs incorporate physical laws (in this case, the Helmholtz equation) into their loss function, so their solutions adhere to established physical principles. The talk will outline the problem statement of duct acoustics, explain the types of boundary conditions relevant to this physical system, and discuss their significance. It will then introduce two solutions: the first uses the λ-method (Lagrange multiplier method) to enforce the boundary conditions; after discussing its limitations, the talk presents a second approach, the trial solution method, which seeks to overcome them.
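
The key idea of the trial solution method can be shown without any training. Assuming Dirichlet conditions p(0) = p0 and p(L) = pL, the network output N(x) is wrapped as p̂(x) = p0(1 - x/L) + pL·x/L + x(L - x)·N(x), so the boundary conditions hold exactly for any weights and the loss only needs the PDE residual. With the λ-method, by contrast, a weighted boundary penalty λ·(boundary error)² is added to the loss and has to be balanced against the residual term. `tiny_net` is a hypothetical stand-in with fixed toy weights, and other boundary types (for example Neumann conditions) need a different construction.

```python
import math

# Hypothetical stand-in for a trained network, with fixed toy weights.
def tiny_net(x, w=(0.7, -1.3, 0.4), b=(0.1, 0.2, -0.3), v=(1.0, -0.5, 2.0)):
    """One-hidden-layer tanh network N(x)."""
    return sum(vi * math.tanh(wi * x + bi) for wi, bi, vi in zip(w, b, v))

def trial_solution(x, length, p0, pL, net=tiny_net):
    """p_hat(x) = p0 (1 - x/L) + pL x/L + x (L - x) N(x).
    The first two terms interpolate the boundary values; the last term
    vanishes at x = 0 and x = L, so the Dirichlet conditions hold for any N."""
    return p0 * (1.0 - x / length) + pL * x / length + x * (length - x) * net(x)

if __name__ == "__main__":
    L, p0, pL = 1.0, 1.0, 0.0
    print(trial_solution(0.0, L, p0, pL), trial_solution(L, L, p0, pL))
    print(trial_solution(0.5 * L, L, p0, pL))   # interior values depend on N(x)
```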

15th November 2024 : Dialect Identification in Indian Languages by Saurabh Kumar

Talk summary:

- Dialect identification (DID) addresses the challenge of recognizing regional variations within a language. Current deep learning approaches focus on audio-only, text-only, or multi-task setups combining automatic speech recognition (ASR) with DID. This work aims to use a multimodal architecture that leverages speech and text features to enhance DID performance.

20th May 2004 : Gumbel-Softmax Trick by Atharva Jeevannavar

Talk summary:

- The Gumbel-Softmax Trick is a technique used in machine learning to approximate discrete categorical samples in a differentiable manner, which enables the use of gradient-based optimization methods, like backpropagation. This technique is particularly useful when dealing with categorical data that is inherently non-differentiable, such as in natural language processing or reinforcement learning tasks. By introducing randomness into the logits through Gumbel noise and adjusting the distribution using a temperature parameter, the Gumbel-Softmax trick allows models to generate diverse predictions while maintaining the differentiability required for training neural networks. It addresses challenges in working with discrete variables by reparameterizing them, making it a crucial tool for optimization in models with categorical variables. The Gumbel-Softmax technique has demonstrated effectiveness in tasks such as generative modeling using variational autoencoders (VAEs) and semi-supervised classification on datasets like MNIST. It also improves performance in environments with discrete action spaces, such as reinforcement learning.
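
A minimal sketch of the trick in plain Python: Gumbel(0, 1) noise is drawn via the inverse CDF, g = -log(-log u), added to the logits, and passed through a temperature-scaled softmax; lower temperatures push samples toward one-hot vectors. The function name is our own, and in practice this would be written in an autodiff framework so that gradients flow through the softmax.

```python
import math
import random

# Hypothetical helper name; plain Python, so no gradients are computed here.
def gumbel_softmax(logits, tau=1.0, rng=random):
    """Relaxed one-hot sample y = softmax((logits + g) / tau), g_i ~ Gumbel(0, 1)."""
    # Inverse-CDF sampling: g = -log(-log(u)) with u ~ Uniform(0, 1).
    # rng.random() can return 0.0, so clamp u to keep the logs finite.
    gumbels = [-math.log(-math.log(max(rng.random(), 1e-12))) for _ in logits]
    scaled = [(z + g) / tau for z, g in zip(logits, gumbels)]
    m = max(scaled)                                # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

if __name__ == "__main__":
    rng = random.Random(0)
    for tau in (5.0, 1.0, 0.1):                    # lower tau -> closer to one-hot
        print(tau, [round(v, 3) for v in gumbel_softmax([1.0, 2.0, 0.5], tau, rng)])
```

Independently of the temperature, the argmax of each relaxed sample is distributed according to softmax(logits) (the Gumbel-max property), which makes a convenient sanity check.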

9th August 2024 : Website development of English Gyaani Audio Fetching Dashboard by Darsh Kumar

Talk summary:

- During my internship at IISc Bangalore, I contributed to the English-Gyaani project by developing an audio fetching dashboard using React.js and Firebase. I enhanced my skills in web development, backend integration, and communication, overcoming challenges with Firebase limits and React.js state management through self-learning and online resources.

26th July 2024 : Quantification of co-articulation for alveolar consonant production in VCV using rtMRI data by SHIEKH MAHAMMAD ARZU

Talk summary:

- In this work, annotations are carried out for the alveolar consonants /t/ and /d/ paired with the vowels /a/, /e/ and /u/. The Euclidean distance is computed between the point of contact (where the tongue touches the alveolar ridge) in the consonant frame and the corresponding point in each of the remaining frames. Two fixed points, the tip of the nose and the projection of the velum, are used to normalize the contours. The results are assessed with statistical tests such as the t-test: no significant difference is observed between frames -3 and +3, and similarly between -2 and +2 and between -1 and +1. The distance increases significantly from frame +1 to +3, and likewise from -1 to -3. Also, the last two frames after the consonant frame (+2 and +3) are statistically the same.

12th July 2024 : Evaluating Machine Translation Models for English-Hindi Language Pairs: A Comparative Analysis by Ahan P Shetty

Talk summary:

- Machine translation has become a critical tool for bridging linguistic gaps, especially between languages as diverse as English and Hindi. This project comprehensively evaluates various machine translation models for translating between English and Hindi. We assess their performance using a diverse set of automatic evaluation metrics, both lexical and machine learning-based. Our evaluation uses an 18000+ English-Hindi parallel corpus and a custom FAQ dataset of questions from government websites. The study aims to provide insights into the effectiveness of different machine translation approaches in handling both general and specialized language domains. Results show varying performance across metrics, highlighting strengths and areas for improvement in current translation systems.

21st June 2024 : Pause and Speech Pattern Analysis in ALS and Parkinson Disease Affected Patients by Nisha Johnson

Talk summary:

- This study is significant as it addresses a critical gap in the objective analysis of speech and pause characteristics among normal individuals, ALS patients, and Parkinson's patients. By identifying distinct patterns in pause segments and speech segments, the research has the potential to revolutionize diagnostic and monitoring practices for these neurodegenerative diseases. The findings will contribute to the development of precise, non-invasive tools for early detection and tracking disease progression. Moreover, the study's evaluation of model accuracy in classifying different groups and determination of pause ratios will enhance our understanding of speech biomarkers, leading to improved clinical interventions and better patient outcomes.

2nd February 2024 : Correction of both APA,AKA files and measure what is the DTW between each of the 74 contours by Lalit Singh Kharayat

Talk summary:

- Speech Recognition in Agriculture and Finance for the Poor in India

12th January 2024 : My experience as an intern by A Shanmukha Priya

Talk summary:

- Abstract: Dive into the dynamic realm of AI and ML and their impact on Automatic Speech Recognition (ASR) and Natural Language Processing (NLP). In my role at Project Vaani, supported by Google, we address the linguistic tapestry of India's 780 languages. I share the challenges faced in categorizing 6300 Hindi transcribed files, from API constraints to a Python-based strategy. Discover our exploration of sentence embeddings and clustering techniques, leveraging models such as IndicBERT and the Universal Sentence Encoder.

29th December 2023 : Speech to Speech Translation - An Overview by Saurabh Kumar

Talk summary:

- In a country like India, where a majority of languages exist primarily in spoken forms, the development of reliable Speech-to-Speech Translation (S2ST) systems becomes crucial. This presentation seeks to offer a concise overview of the latest advancements in S2ST systems and highlight the significant challenges associated with their development for Indian languages.

15th December 2023 : Achieving stable convergence of neural networks for estimating acoustic field in uniform ducts by Ashwin A Raikar

Talk summary:

- The ability of neural networks to act as function approximators can be exploited to solve governing differential equations. In this work, the 1-D Helmholtz equation is solved to predict the acoustic pressure in a uniform duct. Solving the Helmholtz equation across a range of frequencies, especially at higher frequencies, is challenging because the loss function destabilizes the training process, preventing it from converging to the true solution with the desired accuracy. To overcome this issue, a dynamic learning rate technique is proposed that helps stabilize the training process and improves the overall accuracy of the network. The efficiency of the method is demonstrated by comparing its results with those of a static learning rate method and with the analytical solutions. Good agreement is observed between the solution predicted with the dynamic learning rate and the analytical solution up to 2000 Hz. Without the dynamic learning rate, the relative errors are 2% and 58% at 500 and 2000 Hz, respectively, whereas with it they reduce to 0.6% and 0.1% at the same frequencies. The proposed dynamic learning rate method is found to be effective for different types of boundary conditions.

6th October 2023 : Human, Face Detection in Images by Mauli Mehulkumar Patel

Talk summary:

- Many images available on the internet today contain people and various other details that can be exploited. The motivation for detecting recognizable humans and faces in images is to exclude personal details so that the images can be made open-source compatible.

29th September 2023 : EXPLORING SYLLABLE DISCRIMINABILITY WITH INCREASING DYSARTHRIA SEVERITY FOR PATIENTS WITH AMYOTROPHIC LATERAL SCLEROSIS by Neelesh Samptur and Anirudh Chakravarty

Talk summary:

- Dysarthria due to Amyotrophic Lateral Sclerosis (ALS) progressively impairs speech production, compromising the discriminability among different speech sounds. The form and extent of the compromise vary with the dysarthria severity level. Though the changes in the discriminability among different vowels and fricatives with increasing severity have been studied in the literature, the effects on syllables are yet unexplored. In this work, we perform manual and automatic classification of three syllables, /pa/, /ta/ and /ka/, at varied severity levels. Manual classification is performed through listening tests, whereas spectral features and self-supervised speech representations obtained from pretrained models are explored along with different neural network classifiers for the purpose of automatic classification. Experiments with 100 ALS patients and 35 healthy subjects indicate that, though both manual and automatic classification accuracies decline with increasing severity, automatic methods significantly outperform humans at all severity levels, achieving 5.56% and 24.45% higher classification accuracies than humans on utterances from healthy subjects and the most severe patients, respectively. This might indicate that though it becomes perceptually difficult to differentiate the syllables with increasing dysarthria severity, discriminative acoustic cues persist in the utterances which data-driven methods can capture.

22nd September 2023 : Speaker verification for ALS patients by Akash Kaushik

Talk summary:

- Speaker verification for ALS patients using trained x-vector embeddings and PLDA.

8th September 2023 : Spontaneous Indian English Speech Corpus by Charu Samir Shah

Talk summary:

- This presentation discusses the work I have done for the corpus paper, which has been submitted to COCOSDA.

7th July 2023 : Estimating articulatory movements in speech production using neural networks by Kirann Mahendran

Talk summary:

- Estimating articulatory movements from speech acoustic features is known as acoustic-to-articulatory inversion (AAI). Training an AAI model requires a large amount of parallel speech and articulatory motion data. Electromagnetic articulography (EMA) is a promising technology for recording such parallel data: it measures the positions and movements of the articulators, such as the tongue, lips, and jaw, during speech production. EMA data can provide more accurate and detailed information about articulatory movements than acoustic features alone.

7th April 2023 : Beam Search by Amartyaveer

Talk summary:

- Beam search is a greedy, heuristic search algorithm that explores a graph by expanding only the most promising nodes within a limited set whose size B is called the beam width. At each level it expands the B best nodes and moves downward only from them. It is generally used in encoder-decoder models, such as machine translation models, to approximate the most probable output sequence.
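
A compact sketch of beam search over a toy next-token distribution. The `step_fn` interface, the token names, and the probabilities in `TABLE` are hypothetical; real decoders typically add length normalization and batch the model calls. With beam size 1 the procedure reduces to greedy decoding, and the toy table is chosen so that a beam of 2 finds a more probable sequence than greedy decoding does.

```python
import math

# Hypothetical toy model: next-token probabilities given the prefix so far.
TABLE = {
    ("<s>",): {"A": 0.6, "B": 0.4},
    ("<s>", "A"): {"C": 0.5, "D": 0.5},
    ("<s>", "B"): {"E": 0.9, "F": 0.1},
}

def toy_step(prefix):
    """Return {token: log-probability}; any unseen prefix ends the sentence."""
    probs = TABLE.get(tuple(prefix), {"</s>": 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}

def beam_search(step_fn, beam_size, max_len, bos="<s>", eos="</s>"):
    """Keep only the beam_size best partial hypotheses at every step."""
    beams = [([bos], 0.0)]                    # (token sequence, cumulative log-prob)
    finished = []
    for _ in range(max_len):
        candidates = [(seq + [tok], score + lp)
                      for seq, score in beams
                      for tok, lp in step_fn(seq).items()]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_size]:
            # Completed hypotheses leave the beam; the rest are expanded next step.
            (finished if seq[-1] == eos else beams).append((seq, score))
        if not beams:
            break
    finished.extend(beams)                    # keep unfinished ones if max_len was hit
    return max(finished, key=lambda c: c[1])

if __name__ == "__main__":
    for b in (1, 2):
        seq, score = beam_search(toy_step, beam_size=b, max_len=5)
        print(b, seq, round(math.exp(score), 2))
```

Here greedy decoding commits to A (0.6) and ends with probability 0.6 × 0.5 = 0.30, while a beam of 2 keeps B alive and finds B E with 0.4 × 0.9 = 0.36.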

7th April 2023 : Language Modelling by Shreya Som

Talk summary:

- A language model learns the probability of word occurrence from a text dataset. This project involves collecting data from many webpages and cleaning it. An NLTK-based n-gram model is then trained on the data, with smoothing. Finally, how well the model fits the test data is estimated by computing its perplexity.
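
The pipeline can be illustrated with a bigram model, add-one (Laplace) smoothing, and perplexity, written in plain Python rather than with NLTK so that it stays self-contained. The bigram order, the smoothing choice, and the `<s>`/`</s>` boundary tokens are assumptions for illustration, since the project's n-gram order and smoothing method are not specified; the function names are hypothetical.

```python
import math
from collections import Counter

# Hypothetical helpers for illustration; not NLTK's API.
def train_bigram(sentences):
    """Count context unigrams and bigrams with sentence boundary tokens."""
    contexts, bigrams = Counter(), Counter()
    for sent in sentences:
        toks = ["<s>"] + sent.split() + ["</s>"]
        contexts.update(toks[:-1])                 # tokens that precede another token
        bigrams.update(zip(toks[:-1], toks[1:]))
    vocab = {w for s in sentences for w in s.split()} | {"</s>"}   # predictable tokens
    return contexts, bigrams, vocab

def laplace_prob(prev, word, contexts, bigrams, vocab):
    """Add-one smoothed P(word | prev) = (c(prev, word) + 1) / (c(prev) + |V|)."""
    return (bigrams[(prev, word)] + 1) / (contexts[prev] + len(vocab))

def perplexity(sentences, contexts, bigrams, vocab):
    """exp of the average negative log-probability per predicted token."""
    log_sum, n = 0.0, 0
    for sent in sentences:
        toks = ["<s>"] + sent.split() + ["</s>"]
        for prev, word in zip(toks[:-1], toks[1:]):
            log_sum += math.log(laplace_prob(prev, word, contexts, bigrams, vocab))
            n += 1
    return math.exp(-log_sum / n)

if __name__ == "__main__":
    model = train_bigram(["the cat sat", "the dog sat"])
    print(perplexity(["the cat sat"], *model), perplexity(["sat the cat"], *model))
```

On this toy corpus a training sentence gets a lower perplexity than a scrambled one, which is the behaviour perplexity is meant to capture.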

24th March 2023 : Internship Completion Talk by Pranayak Uniyal

Talk summary:

- Modelling wave equations in Python: the individual has demonstrated proficiency in reading and understanding MATLAB code, summarizing research articles, and implementing MATLAB code in Python. They also know the multilayer perceptron architecture and can create a function that computes the outputs of the deep learning model. Analytical problem-solving: the individual has demonstrated the ability to solve second-order differential equations using complementary and particular solutions, and understands how to find analytical solutions to problems involving nonlinear partial differential equations. Coding and plotting solutions: the individual can implement analytical solutions of ODEs in Python, use the bvp Python library function to solve boundary value problems, and use the matplotlib library to represent data and plot the solutions of wave equations. Understanding COMSOL Physics View software: the individual has demonstrated proficiency in using the software to solve physics-based problems, including the absorptive muffler problem, and understands how to apply physics-based solutions to real-world engineering problems.

24th February 2023 : Decoding graph construction in Kaldi by Saurabh Kumar

Talk summary:

- In this session, we will briefly discuss decoding graph creation in Kaldi.
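
For reference, the standard WFST recipe behind Kaldi's decoding graph composes four transducers: G (grammar or language model), L (lexicon), C (context dependency), and H (HMM structure), applying determinization (det) and minimization (min) after each composition (∘). In simplified form:

```latex
HCLG = \min\Big(\det\big(H \circ \min(\det(C \circ \min(\det(L \circ G))))\big)\Big)
```

Kaldi additionally inserts and later removes disambiguation symbols and adds HMM self-loops; this simplified form omits those steps.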

9th December 2022 : An efficient method to solve transcendental equations by Veerababu Dharanalakota

Talk summary:

- A transcendental function is a function that is not algebraic, i.e., it cannot be expressed in terms of a finite sequence of algebraic operations. Transcendental equations arise in many engineering problems; a simple example is sin(x) = x. There are no standard closed-form methods to solve equations of this category, so most researchers use iterative methods such as bisection and Newton-Raphson. In this talk, we will see an alternative, efficient method to solve transcendental equations.
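
For comparison, the conventional iterative baselines mentioned above can be sketched as follows; this is not the alternative method presented in the talk, which the summary does not describe. The example solves cos(x) = x, whose single real root is near 0.739, because sin(x) = x has only the trivial root x = 0, where Newton-Raphson converges slowly. Function names are our own.

```python
import math

# Hypothetical helper names; standard textbook iterations.
def bisection(f, a, b, tol=1e-12, max_iter=200):
    """Halve a sign-changing bracket [a, b] until it is shorter than 2 * tol."""
    fa = f(a)
    if fa * f(b) > 0:
        raise ValueError("f(a) and f(b) must have opposite signs")
    for _ in range(max_iter):
        m = 0.5 * (a + b)
        fm = f(m)
        if fm == 0.0 or 0.5 * (b - a) < tol:
            return m
        if fa * fm < 0:
            b = m                      # root lies in [a, m]
        else:
            a, fa = m, fm              # root lies in [m, b]
    return 0.5 * (a + b)

def newton_raphson(f, df, x0, tol=1e-12, max_iter=50):
    """Iterate x <- x - f(x) / f'(x) until the step is below tol."""
    x = x0
    for _ in range(max_iter):
        step = f(x) / df(x)
        x -= step
        if abs(step) < tol:
            return x
    raise RuntimeError("Newton-Raphson did not converge")

if __name__ == "__main__":
    f = lambda x: math.cos(x) - x          # solve cos(x) = x
    df = lambda x: -math.sin(x) - 1.0
    print(bisection(f, 0.0, 1.0), newton_raphson(f, df, 1.0))
```

Bisection needs about 40 halvings to reach this tolerance, while Newton-Raphson converges in a handful of steps from a good starting point; that trade-off between robustness and speed is what alternative methods aim to improve on.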

25th November 2022 : Attention in End to end speech recognition systems by Sathvik

Talk summary:

- Attention has been used in end-to-end speech recognition since around 2015, and it has since been a prominent formulation for learning alignments. I will first introduce the task of speech recognition and then go over how attention is incorporated.
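
A single attention step can be sketched as follows. This sketch uses scaled dot-product scoring, which is one of several scoring functions used in attention-based ASR (content-based MLP scoring is another); a decoder state acts as the query, and encoder frames act as keys and values. The function name and toy vectors are hypothetical.

```python
import math

# Hypothetical helper; one query attending over a list of encoder frames.
def dot_product_attention(query, keys, values):
    """weights_i = softmax_i(q . k_i / sqrt(d)); context = sum_i weights_i * v_i."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)                               # stabilize the softmax
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim_v = len(values[0])
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)]
    return weights, context

if __name__ == "__main__":
    encoder_frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # toy encoder outputs (keys = values)
    decoder_state = [10.0, 0.0]                             # toy decoder query
    w, ctx = dot_product_attention(decoder_state, encoder_frames, encoder_frames)
    print([round(x, 3) for x in w], [round(x, 3) for x in ctx])
```

The weights form a soft alignment: the query matches frames 0 and 2 equally and nearly ignores frame 1, so the context vector is close to the average of those two frames.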

18th November 2022 : Detection of Overlapped Speech by V Kartikeya

Talk summary:

- Automatic speech recognition systems, speaker diarization systems, and similar systems do not perform well when more than one speaker talks at the same time. In this project, a neural network is built to classify speech segments as overlapped or non-overlapped. The dataset was prepared by mixing single-speaker speech audio that had been separated based on labels. MFCCs were extracted for frames of different lengths and input to the model. A CNN model was trained on the MFCCs, with accuracy and precision as evaluation metrics.
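
The data preparation step can be sketched as follows, assuming two single-speaker signals (already trimmed to speech using the labels) overlaid with a sample offset, and a frame labelled as overlapped when most of its samples contain both speakers. The offset, frame length, majority rule, and function name are hypothetical; the project's exact mixing procedure is not described.

```python
# Hypothetical helper; signals are lists of float samples at the same rate.
def mix_with_overlap(sig_a, sig_b, offset, frame_len):
    """Overlay sig_b onto sig_a starting at sample `offset`; return the mixture
    and one label per full frame (1 = overlapped speech, 0 = single speaker)."""
    n = max(len(sig_a), offset + len(sig_b))
    mix = [0.0] * n
    n_active = [0] * n                       # speakers present at each sample
    for i, s in enumerate(sig_a):
        mix[i] += s
        n_active[i] += 1
    for i, s in enumerate(sig_b):
        mix[offset + i] += s
        n_active[offset + i] += 1
    labels = []
    for start in range(0, n - frame_len + 1, frame_len):   # non-overlapping frames
        frame = n_active[start:start + frame_len]
        overlapped = sum(1 for c in frame if c >= 2)
        labels.append(1 if overlapped > frame_len // 2 else 0)   # majority rule
    return mix, labels

if __name__ == "__main__":
    mix, labels = mix_with_overlap([1.0] * 8, [0.5] * 8, offset=4, frame_len=4)
    print(mix, labels)
```

In practice the signals would also be level-adjusted before mixing, and MFCCs would then be extracted per frame as described above.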

28th October 2022 : Speech based age classification by MAREDDY SAI KRISHNA REDDY

Talk summary:

- The train and test audio files were converted into mel-spectrogram images using Librosa. These images are given as input to a ResNet-34 neural network.

16th September 2022 : Multilingual Sentence Representation & its applications by Abhayjeet Singh

Talk summary:

- This talk discusses the need for, and applications of, multilingual sentence representations, and the techniques and learning methods used to learn these language-independent sentence representations.

9th September 2022 : Sequence to Sequence Model by POOJA V H

Talk summary:

- The increasing digitization of information storage and processing in our daily lives has raised the demand for digitization in many areas, including investigation processes. In recent years, machine translation, text summarization, and image captioning have become active research topics. Various Natural Language Processing (NLP) techniques enable researchers to obtain efficient results for a wide spectrum of documents. The Seq2Seq architecture with RNNs is used to perform tasks such as machine translation and text summarization. Sequence-to-sequence models are best suited for tasks that generate new sentences from a given input, such as summarization, translation, or generative question answering.

26th August 2022 : Mock presentation for INTERSPEECH by Sathvik

Talk summary:

- A 15-minute presentation of our paper accepted at INTERSPEECH 2022, "Streaming model for Acoustic to Articulatory Inversion with transformer networks".

12th August 2022 : Working for English Gyani Project during Summer Internship by Chinmoi Das

Talk summary:

- The English Gyani app is designed to help users learn English grammar and pronunciation. To teach grammar, lessons on various English topics are developed with the help of a teacher. This large body of content, covering many topics, is created in Google Docs and must be incorporated into the app backend. Since the lessons in the documents are free-flowing and do not always follow a particular structure, a consistent format is needed for all the topics covered. The content is therefore formatted into 4 fixed types in Excel sheets, which enables convenient conversion of the grammar content into JSON and, ultimately, into the app backend. This talk is a brief overview of the process and the lessons learnt along the way.

5th August 2022 : Neurolinguistics: Aphasia: The Growing Understanding by Sharmistha Chakrabarti

29th July 2022 : Speaker Diarization by Kushagra Parmeshwar

Talk summary:

- Speaker diarization is the process of splitting an audio stream or clip into homogeneous segments according to the people involved in the conversation. In a nutshell, it answers the question "who spoke when?". We implemented a simple diarizer to help avoid manual transcription of two-person conversations that took place in the many languages spoken nationwide. A typical diarizer comprises the following steps: 1) voice activity detection, 2) speech segmentation, 3) feature extraction, 4) clustering, followed by labeling of the clusters (ideally, the number of clusters equals the number of speakers), and 5) transcription of the conversation. Our diarizer reuses various modules already built by others, largely from the SpeechBrain library. We also added a small script to evaluate the diarization error rate of the system against manual transcriptions stored in a CSV file.
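
The diarization error rate (DER) evaluation can be sketched at frame level: DER = (missed speech + false alarm + speaker confusion) / total reference speech. This sketch assumes one speaker per frame, `None` for non-speech, no forgiveness collar, and hypothesis labels already mapped to reference speaker names; standard scoring tools also search for the optimal speaker mapping and handle overlapping speech. The function name is hypothetical.

```python
# Hypothetical helper; frame-level labels, one speaker (or None) per frame.
def frame_der(reference, hypothesis):
    """(missed + false alarm + confusion) / reference speech frames."""
    missed = false_alarm = confusion = ref_speech = 0
    for ref, hyp in zip(reference, hypothesis):
        if ref is not None:
            ref_speech += 1
            if hyp is None:
                missed += 1            # speech the system did not detect
            elif hyp != ref:
                confusion += 1         # speech attributed to the wrong speaker
        elif hyp is not None:
            false_alarm += 1           # system output speech where there was none
    return (missed + false_alarm + confusion) / ref_speech

if __name__ == "__main__":
    ref = ["A", "A", "B", "B", None, None]
    hyp = ["A", None, "B", "A", "B", None]
    print(frame_der(ref, hyp))         # one miss, one confusion, one false alarm
```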

22nd July 2022 : Application of Digital Games for Speech Therapy in Children: A Systematic Review of Features and Challenges by Betty Kurian

Talk summary:

- Treatment of speech disorders during childhood is essential. Many technologies can help speech and language pathologists (SLPs) practice speech skills, one of which is digital games. This study aimed to systematically investigate the games developed to treat speech disorders in children and their challenges. Articles in which a digital game was developed to treat speech disorders in children were included in the study, and the features of the designed games and their challenges were extracted from them. In these articles, 59.25% of the games had been developed in English, and children with hearing impairments had received more attention from researchers than other patients. Also, the Mel-Frequency Cepstral Coefficients (MFCC) algorithm and the PocketSphinx speech recognition engine had been used more than any other speech recognition algorithm and tool. The evaluation of games showed a positive effect on children’s satisfaction, motivation, and attention during speech therapy exercises. The biggest barriers and challenges mentioned in the studies included a sense of frustration, low self-esteem after several failures in playing games, environmental noise, mismatch between game levels and the target group’s needs, and problems related to speech recognition. The results of this study showed that the games positively affect children’s motivation to continue speech therapy, and they can also serve as aids for SLPs. Before designing such tools, these obstacles and challenges should be considered and solutions proposed.

15th July 2022 : Age classification of ALS/PD patients from their dysarthric speech by Stuti Prasad

Talk summary:

- ALS and PD are neurodegenerative diseases; studying them can help researchers find better ways to safely detect, treat, or prevent ALS/PD, offering hope for individuals now and in the future. This work focuses on determining a patient's age from their speech: since the diseases lead to motor issues, the resulting dysarthric speech has distinctive features worth studying. Using i-vectors with advanced PLDA, the system achieved an accuracy of 74.63%. As future scope, by quantifying the extent of damage to the voice box, a tool could be developed to help people with severely damaged or absent voice boxes by converting their whisper-like speech into normal-sounding speech.

8th July 2022 : Hindi Language Modelling by Ayush Raj

Talk summary:

- Next-word prediction for Hindi. The talk presents the work done, from dataset preparation and preprocessing to modelling.
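
The talk does not say which model was used. Purely to illustrate the next-word prediction task, the sketch below assumes a simple bigram count model over whitespace-tokenised Hindi sentences; `train_bigram`, `predict_next` and the toy corpus are hypothetical and are not the presenter's pipeline.

```python
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count how often each word is followed by each other word (hypothetical bigram model)."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()  # naive whitespace tokenisation (assumption)
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word, k=3):
    """Return up to k most frequent followers of `word`; empty list if `word` was never seen."""
    if word not in counts:  # check first so the defaultdict is not polluted with unseen words
        return []
    return [w for w, _ in counts[word].most_common(k)]


corpus = [
    "मैं घर जा रहा हूँ",
    "मैं बाज़ार जा रहा हूँ",
    "वह घर जा रही है",
]
model = train_bigram(corpus)
print(predict_next(model, "जा"))
```

A neural language model would replace the counts with learned probabilities, but the interface (context in, ranked next words out) stays the same.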

1st July 2022 : Automatic detection of mismatched audio/text pair in Marathi by Nancy Meshram

Talk summary:

- The purpose of this project was to detect mismatches in audio/text pairs. For this, a 1D CNN model was used to classify whether each audio/text pair is matched or not.

24th June 2022 : Error Correction of Hindi OCR by Atul Raj

Talk summary:

- Optical Character Recognition (OCR) is the process of translating paper-based books into digital e-books. OCR output is often erroneous, containing misspellings in the recognized text, especially when the source document is of low print quality. Our problem is divided into two parts: (i) error detection and (ii) error correction. The focus language for this project is Hindi. Most Indian scripts are composed in two dimensions, which distinguishes them from English (Roman) script; as a result, methods developed for Roman script do not apply directly to Indian scripts. We faced two main problems: poor image quality, and the inability of the OCR to extract the correct text owing to the highly inflectional nature of the language. We used a lookup dictionary for error detection and BERT for error correction.
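
The dictionary-lookup detection step can be sketched as below. This is a minimal sketch assuming whitespace tokenisation, stripping only a few punctuation marks (including the danda "।"), and a toy lexicon; `detect_errors` and the example sentence are hypothetical. The BERT correction step is not shown.

```python
def detect_errors(text, lexicon):
    """Flag tokens that are absent from the lexicon as probable OCR errors.

    Returns (token_position, token) pairs. Candidate corrections would be
    produced by a separate model (the talk uses BERT for that step).
    """
    tokens = [tok.strip("।,.!?") for tok in text.split()]  # drop surrounding punctuation
    return [(i, tok) for i, tok in enumerate(tokens) if tok and tok not in lexicon]


lexicon = {"भारत", "एक", "विशाल", "देश", "है"}
ocr_output = "भारत एक विशल देश है।"  # "विशाल" misrecognised as "विशल"
print(detect_errors(ocr_output, lexicon))
```

A plain lookup will also flag valid inflected forms missing from the lexicon, which is one reason the highly inflectional nature of Hindi makes detection hard.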

17th June 2022 : Analysis of Pause Pattern in Kannada and Bengali Subjects having Amyotrophic Lateral Sclerosis or Parkinson’s Disease by Tanuka Bhattacharjee

Talk summary:

- We analyze the differences in speaking and pausing patterns among Amyotrophic Lateral Sclerosis (ALS) patients, Parkinson’s disease (PD) patients and healthy subjects during spontaneous speech and image-description utterances in their native Kannada and Bengali. To the best of our knowledge, no such analysis has yet been conducted for Indian languages. Moreover, a comparison of ALS and PD speech-pause patterns has not been done in any language. A further contribution of this work is an inter-language comparison of the speech-pause patterns of the three subject groups. All these analyses consider cognitive, prosodic and breath pauses. In particular, four features are studied: pause time fraction, transition frequency, pause duration and speech segment duration.
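
The four features can be computed from a time-aligned speech/pause segmentation. The exact definitions used in the work are not given here, so the sketch below assumes: pause time fraction = total pause time / total time, transition frequency = speech-pause label changes per second, and mean durations of pauses and speech segments. It does not distinguish cognitive, prosodic and breath pauses; `pause_features` and the segment list are hypothetical.

```python
from statistics import mean


def pause_features(segments):
    """Compute pause features from chronological (label, start_s, end_s) segments.

    `label` is "speech" or "pause". Feature definitions are assumptions.
    """
    durations = {"speech": [], "pause": []}
    for label, start, end in segments:
        durations[label].append(end - start)
    total = sum(durations["speech"]) + sum(durations["pause"])
    # A transition is any change of label between consecutive segments.
    transitions = sum(1 for a, b in zip(segments, segments[1:]) if a[0] != b[0])
    return {
        "pause_time_fraction": sum(durations["pause"]) / total,
        "transition_frequency": transitions / total,  # transitions per second
        "mean_pause_duration": mean(durations["pause"]) if durations["pause"] else 0.0,
        "mean_speech_duration": mean(durations["speech"]) if durations["speech"] else 0.0,
    }


segments = [
    ("speech", 0.0, 1.5),
    ("pause", 1.5, 2.0),
    ("speech", 2.0, 3.5),
    ("pause", 3.5, 4.5),
    ("speech", 4.5, 5.0),
]
print(pause_features(segments))
```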

10th June 2022 : Blind word error rate prediction using ASR features by Kunal Sah

Talk summary:

- An automatic speech recognition (ASR) system decodes speech signals into text, and measuring the quality of ASR systems is critical for building voice-driven applications that satisfy users. Word Error Rate (WER) has traditionally been used to evaluate ASR quality. WER also indicates how correct the text predicted by an ASR system is: the higher the WER, the lower the confidence that the predicted text is correct. Computing WER requires the target transcription, so for unlabeled data WER cannot be computed directly. The goal of this project is to predict the WER of audio samples without their target transcriptions. Using a pre-trained ASR system, various features of the audio samples can be computed (for example, the N-best hypotheses, word-level confidences of the most probable hypothesis, or posterior distributions of phonemes); these features can then be further processed to obtain a close approximation of the WER. We used the Root Mean Square Error (RMSE) between actual and predicted WER as the loss function, and the correlation coefficient (CC) and Mean Absolute Error (MAE) between predicted and actual WER as evaluation metrics. In our work, we used three different ASR systems, trained on clean, noisy and reverberant speech data, and two ASR features, namely the N-best hypotheses and the word-level confidence of the most probable hypothesis, to predict WER with different deep learning methods.
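
The three quantities named above (RMSE as the loss, MAE and CC for evaluation) can be sketched on plain lists as follows. The CC is assumed to be the Pearson correlation coefficient; `wer_prediction_metrics` and the WER values in the example are hypothetical illustrations, not results from this work.

```python
import math


def wer_prediction_metrics(actual, predicted):
    """Return (RMSE, MAE, Pearson CC) between actual and predicted WER values."""
    n = len(actual)
    errors = [p - a for a, p in zip(actual, predicted)]
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mae = sum(abs(e) for e in errors) / n
    # Pearson correlation: covariance divided by the product of standard deviations.
    mean_a, mean_p = sum(actual) / n, sum(predicted) / n
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    cc = cov / math.sqrt(var_a * var_p)
    return rmse, mae, cc


actual_wer = [0.10, 0.25, 0.40, 0.05]      # illustrative values only
predicted_wer = [0.12, 0.20, 0.45, 0.05]
print(wer_prediction_metrics(actual_wer, predicted_wer))
```

RMSE penalises large misses more heavily than MAE, which makes it a natural training loss, while CC checks whether the predictor ranks utterances in the right order regardless of scale.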

3rd June 2022 : Selection of acoustically similar sentences based on phone error rate in the context of ASR by Saurabh Kumar

Talk summary:

- For many languages, state-of-the-art ASR systems are reported to perform poorly owing to the lack of acoustically and phonetically rich speech data available for system building. Even for resource-rich languages such as English, little effort has been made to find an efficient method for selecting training data similar to the test conditions. Instead, state-of-the-art ASR systems are data hungry and simply require large amounts of speech data for training. Data selection therefore plays a crucial role in developing robust and computationally efficient ASR systems. In the last few years, several methods have been reported that ensure both the acoustic and the phonetic richness of the speech data. In this study, several recently reported data selection methods have been explored and efforts have been made to improve them.

27th May 2022 : WER–BERT: Automatic WER Estimation with BERT in a Balanced Ordinal Classification Paradigm by Abhishek Kumar

Talk summary:

- Automatic Speech Recognition (ASR) systems are evaluated using Word Error Rate (WER), which is computed from the number of errors (substitutions, deletions and insertions) in the ASR transcription relative to the ground truth. This calculation, however, requires manual transcription of the speech signal to obtain the ground truth. Since transcribing audio is costly, Automatic WER Evaluation (e-WER) methods have been developed to predict the WER of a speech system automatically, relying only on the transcription and speech signal features. Although WER is a continuous variable, previous work has shown that framing e-WER as a classification problem is more effective than regression. However, when converted to a classification setting, these approaches suffer from heavy class imbalance. In this paper, we propose a new balanced paradigm for e-WER in a classification setting. Within this paradigm, we also propose WER-BERT, a BERT-based architecture with speech features for e-WER. Furthermore, we introduce a distance loss function to tackle the ordinal nature of e-WER classification. The proposed approach and paradigm are evaluated on the LibriSpeech dataset and a commercial (black-box) ASR system, Google Cloud’s Speech-to-Text API. The experiments demonstrate that WER-BERT establishes a new state of the art in automatic WER estimation.
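
For reference, the ground-truth WER that e-WER tries to predict is the standard WER = (S + D + I) / N, where N is the number of reference words. A minimal sketch using word-level Levenshtein alignment follows; `word_error_rate` is a hypothetical helper that assumes whitespace tokenisation and a non-empty reference.

```python
def word_error_rate(reference, hypothesis):
    """Standard WER = (substitutions + deletions + insertions) / reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # d[i][j] = minimum edits turning the first i reference words into the first j hypothesis words
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            sub = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,        # deletion
                d[i][j - 1] + 1,        # insertion
                d[i - 1][j - 1] + sub,  # match or substitution
            )
    return d[len(ref)][len(hyp)] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over six reference words.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

This function needs the reference transcript, which is exactly what e-WER methods such as WER-BERT avoid.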

20th May 2022 : Unsupervised representation learning for speaker verification by Prajesh Rana

Talk summary:

- The objective of speaker verification is to authenticate a claimed identity from measurements of the voice signal. For speaker verification, I am exploring contrastive-loss-based self-supervised learning (SSL). My work consists of two parts: in the first, I trained the self-supervised model; in the second, I use the pretrained model as a feature extractor and train a PLDA backend. I will compare my results with the TDNN-PLDA and TDNN-ECAPA algorithms.
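
The talk does not name the specific contrastive loss. As an assumption, the sketch below uses an InfoNCE-style loss with cosine similarity and a temperature, where the positive is taken to be an embedding of another segment from the same speaker and the negatives come from other speakers; `info_nce` and the toy 2-D embeddings are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style contrastive loss for one anchor.

    Cross-entropy of picking the positive among {positive} + negatives,
    using temperature-scaled cosine similarities as logits.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    m = max(logits)  # subtract the max before exponentiating for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[0]


anchor = [1.0, 0.0]          # segment from speaker A
same_speaker = [0.9, 0.1]    # another segment from speaker A
others = [[0.0, 1.0], [-1.0, 0.0]]  # segments from other speakers
print(info_nce(anchor, same_speaker, others))
```

Minimising this loss pulls same-speaker embeddings together and pushes other speakers apart, which is what makes the learned encoder usable as a feature extractor for a PLDA backend.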

13th May 2022 : Extracting features using Self Supervised learning using ASR by Abhishek

Talk summary:

- Automatic Speech Recognition (ASR), also termed speech-to-text conversion, is the task of transcribing speech into text. Here we aim to learn features that not only make good use of the available data but are also robust to noise. To support this aim, I have compared the ASR performance of MFCC and filterbank (F-bank) features with features learnt by wav2vec, a self-supervised representation learning model. The metrics used for comparison are PER and CER.

6th May 2022 : A Stage Match For Query-By-Example Spoken Term Detection Based On Structure Information Of Query by Deekshitha G

Talk summary:

- State-of-the-art query-by-example spoken term detection (QbE-STD) strategies are usually based on segmental dynamic time warping (S-DTW). However, the sliding window in S-DTW may split the signal of a word across different segments and produce many illegal candidates that must be compared with the query, which significantly reduces the accuracy and efficiency of detection. This paper proposes a stage match strategy based on the structure information of the query, represented by the unvoiced-voiced attributes of its portions. The strategy first locates potential candidates in the utterances whose structure is similar to the query's, and then matches the query against them with Type-Location DTW (TLDTW), a modified DTW constrained by the pronunciation types and relative positions of paired frames in the voiced sub-segments. Experiments on the AISHELL-1 corpus showed that the proposed approach achieved a relative improvement over S-DTW and sped up retrieval.
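
Both S-DTW and TLDTW build on plain dynamic time warping. The sketch below shows only the basic DTW recurrence, not the segmental windowing or TLDTW's pronunciation-type and relative-position constraints. For brevity, frames are scalars with an absolute-difference cost; real QbE-STD systems compare feature vectors. `dtw_distance` is a hypothetical helper.

```python
def dtw_distance(query, candidate, dist=lambda a, b: abs(a - b)):
    """Basic DTW alignment cost between two frame sequences.

    D[i][j] is the cheapest cost of aligning query[:i] with candidate[:j],
    allowing horizontal, vertical and diagonal steps.
    """
    n, m = len(query), len(candidate)
    inf = float("inf")
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = dist(query[i - 1], candidate[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


# The second sequence is a time-stretched copy of the first, so DTW aligns them at zero cost.
print(dtw_distance([1, 2, 3], [1, 2, 2, 3]))
```

The quadratic cost of filling this table for every candidate window is why pruning candidates by query structure, as the proposed stage match does, can speed up retrieval.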

29th April 2022 : Paper review by Sathvik Udupa

Talk summary:

- Two papers were reviewed. 1. "Understanding the Role of Self Attention for Efficient Speech Recognition": Transformer neural networks are increasingly used in automatic speech recognition (ASR). This work investigates the inner workings of such networks in ASR and introduces techniques to reduce recognition latency. 2. "Chunked Autoregressive GAN for Conditional Waveform Synthesis": neural vocoders based on generative adversarial networks (GANs) have performed well in speech synthesis in recent years. The authors show that these vocoders are unable to generate accurate pitch and periodicity, and introduce an autoregressive GAN-based vocoder to tackle these issues.

15th April 2022 : Broadcasted Residual Learning for Efficient Keyword Spotting by Siddarth

Talk summary:

- We present a broadcasted residual learning method that achieves high accuracy with a small model size and low computational load. Our method configures most of the residual functions as 1D temporal convolutions while still allowing 2D convolution, using a broadcasted-residual connection that expands the temporal output to the frequency-temporal dimension.
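
The broadcasting idea can be sketched on a single frequency-by-time feature map. This is a deliberately simplified sketch, not the published block: it averages over frequency, applies one 1D temporal convolution, broadcasts the resulting time-only vector back across every frequency row, and adds it to the input as a residual. The frequency-wise 2D convolution, normalisation, activation and pointwise convolution of the actual architecture are omitted; `temporal_conv1d` and `broadcasted_residual` are hypothetical helpers.

```python
def temporal_conv1d(signal, kernel):
    """'Same'-length 1D cross-correlation with zero padding (odd kernel length assumed)."""
    half = len(kernel) // 2
    padded = [0.0] * half + list(signal) + [0.0] * half
    return [sum(k * padded[t + i] for i, k in enumerate(kernel)) for t in range(len(signal))]


def broadcasted_residual(x, kernel):
    """Simplified broadcasted residual step on an F x T nested list.

    1. Average over frequency -> a length-T temporal vector.
    2. Apply a cheap 1D convolution along time only.
    3. Broadcast the temporal output to every frequency row and add the input (residual).
    """
    n_freq, n_time = len(x), len(x[0])
    pooled = [sum(x[f][t] for f in range(n_freq)) / n_freq for t in range(n_time)]
    temporal = temporal_conv1d(pooled, kernel)
    return [[x[f][t] + temporal[t] for t in range(n_time)] for f in range(n_freq)]


features = [[1.0, 2.0, 3.0],   # frequency bin 0 over three frames
            [3.0, 4.0, 5.0]]   # frequency bin 1 over three frames
print(broadcasted_residual(features, [0.0, 1.0, 0.0]))
```

Because the convolution runs on a length-T vector rather than the full F x T map, its cost does not grow with the number of frequency bins, which is the source of the efficiency gain.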

25th March 2022 : Wave Equation and Fundamentals by Veerababu Dharanalakota

Talk summary:

- A mathematical description of speech signals (sound waves) is worth knowing not only for its own sake but also because it can mimic reality under certain conditions. This requires knowing how the wave equation is derived and the assumptions that underlie it. The talk covers the derivation of the wave equation from the fundamental equations of fluid dynamics (the continuity, momentum and energy equations), which in turn follow from the conservation laws of mass, momentum and energy. The talk also covers the general terminology used in the study of sound.
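
Under the usual textbook assumptions (small perturbations p′, ρ′ and u about a quiescent, uniform medium of density ρ₀; inviscid flow; adiabatic behaviour supplied by the energy equation), the derivation can be sketched as follows. The notation is ours and may differ from the talk's.

```latex
\begin{aligned}
&\text{Linearised continuity (mass):} && \frac{\partial \rho'}{\partial t} + \rho_0 \nabla\cdot\mathbf{u} = 0 \\
&\text{Linearised momentum (Euler):} && \rho_0 \frac{\partial \mathbf{u}}{\partial t} + \nabla p' = 0 \\
&\text{Adiabatic state relation (energy):} && p' = c^2 \rho', \qquad c^2 = \left(\frac{\partial p}{\partial \rho}\right)_s \\
&\text{Differentiate continuity in } t \text{, substitute momentum:} && \frac{\partial^2 \rho'}{\partial t^2} - \nabla^2 p' = 0 \\
&\text{Eliminate } \rho' = p'/c^2: && \frac{1}{c^2}\frac{\partial^2 p'}{\partial t^2} - \nabla^2 p' = 0
\end{aligned}
```

The linearisation (dropping products of small perturbations) and the adiabatic assumption are exactly the conditions under which this equation mimics real sound propagation.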

11th March 2022 : Pnoi: Development and Challenges by Syed Fahad

Talk summary:

- A discussion of the developments in the creation of Pnoi, a specialized digital stethoscope for capturing lung and breathing sounds. These sounds can be used for medical diagnosis at substantially lower cost than current standards.

4th March 2022 : Large Text Corpus Creation using Web Scraping for Language Modelling by Hemantha Krishna Bharadwaj

Talk summary:

- Collecting large datasets for training language models requires techniques that extract data from the World Wide Web systematically. Collectively known as web scraping, these techniques are well established in previous research, but there is little research on their use for collecting data in languages other than English. This talk will detail improved methods of extracting domain-specific non-English text from the internet using a combination of HTML parsing libraries and frameworks in Python. The proposed methodology can provide large non-English text datasets in an automated fashion.
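
The talk does not name the libraries it used. As a stand-in, the sketch below uses Python's standard-library `html.parser` to keep only the text inside `<p>` elements of an already-downloaded page, discarding navigation, scripts and markup. Page fetching, crawling and language filtering are not shown; `ParagraphExtractor` and the Hindi sample page are hypothetical.

```python
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collect whitespace-normalised text from <p> elements, ignoring everything else."""

    def __init__(self):
        super().__init__()
        self.in_paragraph = False
        self.paragraphs = []
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self.in_paragraph = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "p" and self.in_paragraph:
            text = " ".join("".join(self._buffer).split())  # collapse runs of whitespace
            if text:
                self.paragraphs.append(text)
            self.in_paragraph = False

    def handle_data(self, data):
        # Text from inline tags such as <b> inside a paragraph is kept.
        if self.in_paragraph:
            self._buffer.append(data)


html = """<html><body><nav>मुख्य पृष्ठ</nav>
<p>यह पहला <b>अनुच्छेद</b> है।</p><script>var x = 1;</script>
<p>दूसरा अनुच्छेद।</p></body></html>"""
parser = ParagraphExtractor()
parser.feed(html)
print(parser.paragraphs)
```

In practice a per-site rule (which tags or CSS classes hold the article body) is usually needed to keep the extracted text domain-specific.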

18th February 2022 : Attention and Transformers by Abhayjeet Singh

Talk summary:

- An intuitive and mathematical understanding of attention and Transformer networks.
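
The core operation of a Transformer is scaled dot-product attention, softmax(QKᵀ / √d_k) V. The sketch below implements that formula for a single head on nested lists, without masking or the learned query/key/value projections; the helper names are ours.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention: each output row is a softmax-weighted sum of the value rows."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k) to keep scores moderate.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs


Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [1.0, 0.0]]  # identical keys receive equal attention weights
V = [[0.0], [2.0]]
print(scaled_dot_product_attention(Q, K, V))
```

Because the two keys are identical, the query attends to them equally and the output is the average of the two values.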

11th February 2022 : wav2vec 2.0 by Siddarth C

Talk summary: