
Anjali Jayakumar's MTech by Research

Speech Based Low-Complexity Classification of Patients with Amyotrophic Lateral Sclerosis from Healthy Controls: Exploring the Role of Hypernasality

Amyotrophic Lateral Sclerosis (ALS) is a progressive neurodegenerative disease that affects motor neurons, leading to muscle weakness, atrophy, and loss of speech. As the disease progresses, speech impairments such as hypernasality become prominent, often making communication difficult and impacting quality of life. Currently, ALS monitoring relies on time-consuming, invasive methods, leaving a gap for faster, non-invasive solutions.

This study explores the potential of using speech, particularly hypernasality, to distinguish ALS patients from healthy individuals. By leveraging simple, low-complexity models and speech features like Mel-frequency cepstral coefficients (MFCC) and HuBERT representations, the research focuses on creating an efficient tool for ALS detection without requiring large datasets. The work introduces a nasality-based classification model that trains on healthy speech data to identify nasal and non-nasal sounds. By analyzing ALS speech through nasality, the model successfully distinguishes ALS from healthy speech with good accuracy. The findings show that nasality serves as a reliable marker for ALS, and low-complexity models can achieve competitive accuracy even with smaller datasets. This approach paves the way for developing accessible, scalable tools for monitoring ALS in everyday settings.

Research Guides : Prasanta Kumar Ghosh

Publications

- Anjali Jayakumar, Tanuka Bhattacharjee, Seena Vengalil, Yamini Belur, Nalini Atchayaram and Prasanta Kumar Ghosh, "Low complexity model with single dimensional feature for speech based classification of amyotrophic lateral sclerosis patients and healthy individuals", accepted in Proc. International Conference on Signal Processing and Communications (SPCOM), Bangalore, India, Jul. 2024, page(s): 1-5. [pdf][slides]

- Anjali Jayakumar, Tanuka Bhattacharjee, Seena Vengalil, Yamini Belur, Nalini Atchayaram, Keerthipriya M, Darshan Chikktimmegowda and Prasanta Kumar Ghosh, "Classification between patients with Amyotrophic Lateral Sclerosis and healthy individuals using hypernasality in speech: A low complexity approach", accepted in Proc. National Conference on Communications (NCC), Delhi, India, Mar. 2025, page(s): 1-6. [pdf][slides]

Tanuka Bhattacharjee's PhD

Characterization and Enhancement of Dysarthric Speech for Amyotrophic Lateral Sclerosis: A Source-Filter Perspective

Dysarthria is a motor speech disorder that affects different aspects of speech functions, like respiration, phonation, articulation, prosody, and resonance. It progressively compromises the intelligibility and naturalness of speech, making vocal communication extremely difficult for the affected individuals. This thesis explores the source-filter angle of dysarthric speech for characterization and enhancement of this type of speech. We particularly focus on dysarthria caused by the incurable neurodegenerative disease Amyotrophic Lateral Sclerosis (ALS).

The source-filter modelling captures the physiological processes of speech production, and hence, facilitates a detailed analysis of how ALS-related dysarthria affects these processes. Thus, this research direction can enhance our understanding of the nature and behavior of the disease, help in targeted automatic assessment and monitoring, as well as lead to the development of physiologically motivated intelligent assistive systems for the patients. The major contributions of the thesis are - (1) analysis of different source, filter, and overall cues for ALS vs healthy classification and dysarthria severity classification in ALS, (2) development of source and filter characteristics based transfer learning approaches for dysarthria severity classification in ALS, (3) analysis of the degree of source-level and filter-level inter-speaker differences existing among the ALS patients, and (4) analysis of the role of source and filter in enhancing dysarthric speech specific to ALS.

Google Scholar

Research Guides : Prasanta Kumar Ghosh

Publications

- Upendra Vishwanath Y. S., Tanuka Bhattacharjee, Deekshitha G, Sathvik Udupa, Kumar Chowdam Venkata Thirumala, Madassu Keerthipriya, Darshan Chikktimmegowda, Dipti Baskar, Yamini Belur, Seena Vengalil, Atchayaram Nalini and Prasanta Kumar Ghosh, "Comparison of acoustic and textual features for dysarthria severity classification in Amyotrophic Lateral Sclerosis", accepted in Proc. Interspeech, Rotterdam, The Netherlands, 2025, Page(s): 803-807. [pdf]

- Tanuka Bhattacharjee, Seena Vengalil, Yamini Belur, Nalini Atchayaram and Prasanta Kumar Ghosh, "Inter-speaker acoustic differences of sustained vowels at varied dysarthria severities for Amyotrophic Lateral Sclerosis", accepted in Journal of Acoustical Society of America (JASA), Dec. 2024, volume 4, issue 12, page(s): 125203. [pdf]

- Neelesh Samptur, Tanuka Bhattacharjee, Anirudh Chakravarty K, Seena Vengalil, Yamini Belur, Atchayaram Nalini and Prasanta Kumar Ghosh, "Exploring syllable discriminability during diadochokinetic task with increasing dysarthria severity for patients with amyotrophic lateral sclerosis", accepted in Proc. Interspeech, Kos Island, Greece, Sept. 2024, page(s): 4114-4118. [pdf][slides]

- Chowdam Venkata Thirumala Kumar, Tanuka Bhattacharjee, Seena Vengalil, Saraswati Nashi, Madassu Keerthipriya, Yamini Belur, Atchayaram Nalini and Prasanta Kumar Ghosh, "Spectral analysis of vowels and fricatives at varied levels of dysarthria severity for Amyotrophic Lateral Sclerosis", accepted in Proc. International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, Apr. 2024, page(s): 12767-12771. [pdf][poster]

- Tanuka Bhattacharjee, Anjali Jayakumar, Yamini Belur, Atchayaram Nalini, Ravi Yadav and Prasanta Kumar Ghosh, "Transfer learning to aid dysarthria severity classification for patients with amyotrophic lateral sclerosis", accepted in Proc. Interspeech, Dublin, Ireland, Aug. 2023, page(s): 1543-1547. [pdf][poster]

- Chowdam Venkata Thirumala Kumar, Tanuka Bhattacharjee, Yamini Belur, Atchayaram Nalini, Ravi Yadav and Prasanta Kumar Ghosh, "Classification of multi-class vowels and fricatives from patients having amyotrophic lateral sclerosis with varied levels of dysarthria severity", accepted in Proc. Interspeech, Dublin, Ireland, Aug. 2023, page(s): 146-150. [pdf]

- Tanuka Bhattacharjee, Yamini Belur, Atchayaram Nalini, Ravi Yadav and Prasanta Kumar Ghosh, "Exploring the role of fricatives in classifying healthy subjects and patients with Amyotrophic Lateral Sclerosis and Parkinson’s Disease", accepted in Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 2023, Page(s): 1-5. [pdf][slides][poster]

- Tanuka Bhattacharjee, Chowdam Venkata Thirumala Kumar, Yamini Belur, Atchayaram Nalini, Ravi Yadav and Prasanta Kumar Ghosh, "Static and dynamic source and filter cues for classification of amyotrophic lateral sclerosis patients and healthy subjects", accepted in Proc. International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, Jun. 2023, page(s): 1-5. [pdf][slides][poster]

- Tanuka Bhattacharjee, Jhansi Mallela, Yamini Belur, Nalini Atchayaram, Ravi Yadav, Pradeep Reddy, Dipanjan Gope and Prasanta Kumar Ghosh, "Source and vocal tract cues for speech-based classification of patients with Parkinson’s disease and healthy subjects", accepted in Proc. Interspeech, Brno, Czechia, Sept. 2021, page(s): 2961-2965. [pdf][slides]

- Tanuka Bhattacharjee, Jhansi Mallela, Yamini Belur, Nalini Atchayaram, Ravi Yadav, Pradeep Reddy, Dipanjan Gope and Prasanta Kumar Ghosh, "Effect of noise and model complexity on detection of amyotrophic lateral sclerosis and Parkinson’s disease using pitch and MFCC", accepted in Proc. International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, Ontario, Canada, Jun. 2021, page(s): 7313-7317. [pdf][slides][poster]

Shivani Yadav's PhD

Analysis of vocal sounds in asthmatic patients

Around 334 million people have asthma worldwide. Asthma is an inflammatory disease of the airways which causes cough, breathlessness, chest tightness, and other peculiar sounds during breathing. Spirometry, the gold-standard test, is used to diagnose and monitor asthma. It is a lung function test that measures the time and volume of air a person can exhale after a deep inhalation. In general, patients repeat the test multiple times to get accurate readings, making it very time-consuming and strenuous, especially for children and older people.

A spirometer is also an expensive and bulky device that is not suitable for a home monitoring setup. Hence, a need for an easy and fast method exists.

Sound-based analysis can be one such method. The motivation behind using sounds for monitoring and diagnosis of asthma originates from the sound production mechanism. In the literature, non-speech sounds, namely cough and breath recorded at the chest, have been explored. However, the analysis of speech and non-speech sounds recorded at the mouth is the least investigated. Therefore, the work done in this thesis addresses this problem. For the analysis, we started with two tasks, namely classification and spirometry prediction, for each sound category. Accordingly, the thesis is divided into two parts.

Analysis of speech sounds: For speech sound analysis, classification between the asthmatic and healthy subjects and spirometry prediction tasks have been performed. Results of the classification and spirometry prediction task show that /oo/ (as in 'Home') is the best for the classification task, whereas /ee/ (as in 'Meet') is best for the spirometry prediction. Results of the classification task suggest that Mel-frequency cepstral coefficients (MFCC) statistics carry maximum information for the discrimination in the case of all speech sounds considered in this work. Spirometry variables prediction task results show that the low frequency carries more information in best-performing sound /ee/.

Analysis of non-speech sounds: Classification performed with the non-speech sounds, cough and breath, indicates that MFCCs are the best features, but interestingly, static MFCC coefficients are more informative than the velocity and acceleration coefficients. Breath performs the best for the classification task in the non-speech sound group. Further analysis of the breath signal shows that discriminative information for classification is not uniform across the entire breath signal. Interestingly, the middle 50% of the breath signal carries maximum information for the classification. To extract the middle 50% of a breath, prior knowledge of breath boundaries is required. Therefore, we developed an unsupervised breath segmentation algorithm using dynamic programming. Classification results using predicted boundaries are found to be on par with those using the ground truth boundaries. Comparison of speech sounds and breath for the classification task shows that breath sound outperformed speech sounds. Experiments conducted for the spirometry prediction task using non-speech sounds indicate that breath performs better than cough. In the spirometry prediction task, speech sounds outperformed the non-speech sounds.

A linear discriminant-based analysis has been carried out to determine what kind of spectral changes occur in an asthmatic patient's sound before and after taking a bronchodilator. For this task, breath sounds have been chosen. We observed that the frequency bands 400Hz-500Hz and 1480Hz-1900Hz are more sensitive to bronchodilation in breath sound.
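As an illustration of the "middle 50%" step described above, the following is a minimal sketch of how the central portion of one breath could be cut out once its boundaries are known. The function name `middle_fraction` and the sample-index interface are assumptions made for this example; the thesis does not specify how the extraction is implemented.

```python
def middle_fraction(samples, start, end, fraction=0.5):
    """Return the central `fraction` of the segment samples[start:end].

    `start` and `end` are sample indices of one breath (hypothetical
    interface: the boundaries could come from manual annotation or from
    a segmentation algorithm).
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    if not 0 <= start < end <= len(samples):
        raise ValueError("invalid breath boundaries")
    length = end - start
    keep = int(round(length * fraction))   # number of samples to keep
    offset = (length - keep) // 2          # equal trim on both sides
    return samples[start + offset:start + offset + keep]


# Usage: a breath spanning samples 20..59 keeps its central 20 samples.
signal = list(range(100))
segment = middle_fraction(signal, 20, 60)
```

With predicted boundaries in place of annotated ones, the same call applies unchanged, which is why boundary quality matters for this step.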

Google Scholar

Research Guides : Prasanta Kumar Ghosh, Dipanjan Gope, Uma Maheshwari K

Aravind Illa's PhD

Acoustic-Articulatory Mapping: Analysis and Improvements with Neural Network Learning Paradigms

Human speech is one of many acoustic signals we perceive, and it carries linguistic and paralinguistic (e.g., speaker identity, emotional state) information. Speech acoustics are produced as a result of different temporally overlapping gestures of speech articulators (such as lips, tongue tip, tongue body, tongue dorsum, velum, and larynx), each of which regulates constriction in different parts of the vocal tract. Estimating speech acoustic representations from articulatory movements is known as articulatory-to-acoustic forward (AAF) mapping, i.e., articulatory speech synthesis, while estimating articulatory movements back from the speech acoustics is known as acoustic-to-articulatory inverse (AAI) mapping. These acoustic-articulatory mapping functions are known to be complex and nonlinear.

The complexity of this mapping depends on a number of factors. These include the kind of representations used in the acoustic and articulatory spaces. Typically these representations capture both linguistic and paralinguistic aspects of speech. How each of these aspects contributes to the complexity of the mapping is unknown. These representations and, in turn, the acoustic-articulatory mapping are affected by the speaking rate as well. The nature and quality of the mapping vary across speakers. Thus, the complexity of the mapping also depends on the amount of data from a speaker as well as the number of speakers used in learning the mapping function. Further, how language variations impact the mapping requires detailed investigation. This thesis analyzes a few of these factors in detail and develops neural network based models to learn mapping functions robust to many of them.

Electromagnetic articulography (EMA) sensor data has been used directly in the past as articulatory representations (ARs) for learning the acoustic-articulatory mapping function. In this thesis, we address the problem of optimal EMA sensor placement such that the air-tissue boundaries as seen in the mid-sagittal plane of real-time magnetic resonance imaging (rtMRI) are reconstructed with minimum error. Following the optimal sensor placement work, acoustic-articulatory data was collected using EMA from 41 subjects with speech stimuli in English and Indian native languages (Hindi, Kannada, Tamil and Telugu), which resulted in a total of ~23 hours of data used in this thesis. Representations from the raw waveform are also learnt for the AAI task using convolutional and bidirectional long short-term memory neural networks (CNN-BLSTM), where the learned filters of the CNN are found to be similar to those used for computing Mel-frequency cepstral coefficients (MFCCs), typically used for the AAI task. In order to examine the extent to which a representation having only the linguistic information can recover ARs, we replace MFCC vectors with one-hot encoded vectors representing phonemes, which were further modified to remove the time duration of each phoneme and keep only the phoneme sequence. Experiments with the phoneme sequence using an attention network achieve an AAI performance that is identical to that using phonemes with timing information, while there is a drop in performance compared to that using MFCCs.

Experiments examining variation in speaking rate reveal that the errors in estimating the vertical motion of tongue articulators from acoustics at a fast speaking rate are significantly higher than those at a slow speaking rate. In order to reduce the demand for data from a speaker, low-resource AAI is proposed using a transfer learning approach. Further, we show that AAI can be modeled to learn acoustic-articulatory mappings of multiple speakers through a single AAI model rather than building separate speaker-specific models. This is achieved by conditioning an AAI model with speaker embeddings, which benefits AAI in seen and unseen speaker evaluations. Finally, we show the benefit of estimated ARs in a voice conversion application. Experiments revealed that ARs estimated from speaker-independent AAI preserve linguistic information and suppress speaker-dependent factors. These ARs (from an unseen speaker and language) are used to drive a target-speaker-specific AAF to synthesize speech, which preserves linguistic information and the target speaker’s voice characteristics.
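To make the two phoneme-based inputs concrete, here is a small sketch of frame-level one-hot vectors (phonemes with timing) and the duration-free phoneme sequence obtained from them. The function names, the toy phoneme inventory, and the frame labels are all hypothetical; the thesis does not describe its preprocessing code.

```python
from itertools import groupby


def one_hot(labels, inventory):
    """Encode each phoneme label as a one-hot vector over `inventory`."""
    index = {phoneme: i for i, phoneme in enumerate(inventory)}
    size = len(inventory)
    return [[1 if index[label] == j else 0 for j in range(size)]
            for label in labels]


def collapse_durations(frame_labels):
    """Drop timing: keep one entry per run of identical frame labels.

    Caveat of this simple sketch: two adjacent occurrences of the same
    phoneme are merged into a single entry.
    """
    return [label for label, _ in groupby(frame_labels)]


# Toy example (assumed inventory and frame labels).
inventory = ["ae", "k", "t"]
frames = ["k", "k", "ae", "ae", "ae", "t"]

with_timing = one_hot(frames, inventory)                      # 6 vectors
sequence_only = one_hot(collapse_durations(frames), inventory)  # 3 vectors
```

The sequence-only input is shorter than the articulatory trajectory it must predict, which is one reason an attention-based model is a natural fit for that setting.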

Google Scholar

Research Guides : Prasanta Kumar Ghosh

Abinay Reddy's MTech by Research

Speaker verification using whispered speech

Like neutral speech, whispered speech is one of the natural modes of speech production, and it is often used by speakers in their day-to-day life. For some people, such as laryngectomees, whispered speech is the only mode of communication. Despite the absence of voicing in whispered speech and the difference in characteristics compared to neutral speech, previous works in the literature demonstrated that whispered speech contains adequate information about the content and the speaker.

In recent times, virtual assistants have become more natural and widespread. This led to an increase in the scenarios, where the device has to detect the speech and verify the speaker even if the speaker whispers. Due to the noise-like characteristics, detecting whispered speech is a challenge. On the other hand, a typical speaker verification system, where neutral speech is used for enrolling the speakers but whispered speech for testing, often performs poorly due to the difference in acoustic characteristics between the whispered and the neutral speech. Hence, the aim of this thesis is two-fold: 1) develop a robust whisper activity detector specifically for speaker verification task, 2) improve whispered speech based speaker verification performance.

The contributions in this thesis lie in whisper activity detection as well as whispered speech based speaker verification. It is shown how an Attention-based average pooling in a speaker verification model can be used to detect the whispered speech regions in noisy audio more accurately than the best of the baseline schemes available. For improving speaker verification using whispered speech, we proposed features based on formant gaps, and we showed that these features are more invariant to the modes of the speech compared to the best of the existing features. We also proposed two feature mapping methods to convert the whispered features to neutral features for speaker verification. In the first method, we introduced a novel objective function, based on cosine similarity, for training a DNN, used for feature mapping. In the second method, we iteratively optimized the feature mapping model using cosine similarity based objective function and the total variability space likelihood in the i-vector based background model. The proposed optimization provided a more reliable mapping from whispered features to neutral features resulting in an improvement of speaker verification equal error rate by 44.8% (relative) over an existing DNN based feature mapping scheme.
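The idea of a cosine similarity based objective for feature mapping can be sketched as follows. This is only an illustrative loss under the assumption that mapped whispered features and their paired neutral features are compared vector by vector; the exact objective, network, and i-vector pipeline used in the thesis are not given here, and the names `cosine_similarity` and `cosine_loss` are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def cosine_loss(mapped, target):
    """Mean of (1 - cosine similarity) over paired feature vectors.

    Minimizing this loss is equivalent to maximizing the average cosine
    similarity between mapped and target features.
    """
    pairs = list(zip(mapped, target))
    return sum(1.0 - cosine_similarity(m, t) for m, t in pairs) / len(pairs)
```

Because the loss depends only on direction and not on magnitude, it matches scoring back ends that compare speaker representations by cosine distance.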

Google Scholar

Research Guides : Prasanta Kumar Ghosh

Publications

- Abinay Reddy Naini, Satyapriya Malla and Prasanta Kumar Ghosh, "Whisper activity detection using CNN-LSTM based attention pooling network trained for a speaker identification task", accepted in Interspeech 2020. [pdf][slides][presentation]

- Abinay Reddy Naini, Achuth Rao M V and Prasanta Kumar Ghosh, "Formant-gaps features for speaker verification using whispered speech", accepted in ICASSP 2019. [pdf][poster]

- Abinay Reddy Naini, Achuth Rao M V and Prasanta Kumar Ghosh, "Whisper to neutral mapping using cosine similarity maximization in i-vector space for speaker verification", accepted in Interspeech 2019. [pdf][poster]

- Abinay Reddy N, Achuth Rao M V, G. Nisha Meenakshi and Prasanta Kumar Ghosh, "Reconstructing neutral speech from tracheoesophageal speech", accepted in Interspeech 2018. [pdf][poster]

Achuth Rao's PhD

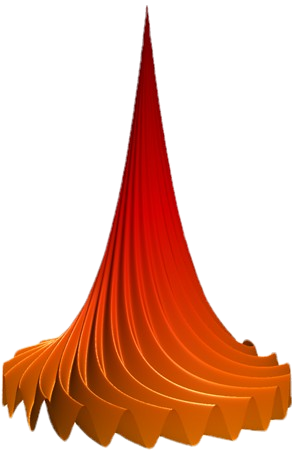

Probabilistic source-filter model of speech

The human respiratory system plays a crucial role in breathing and swallowing. However, it also plays an essential role in speech production, which is unique to humans. Speech production involves expelling air from the lungs. As the air flows from the lungs to the lips, some kinetic energy gets converted to sound. Different structures modulate the generated sound, which is finally radiated out of the lips. Speech carries various kinds of information such as linguistic content, speaker identity, emotional state, accent, etc. Apart from speech, there are various scenarios where sound is generated in the human respiratory system. These could be due to abnormalities in the muscles, motor control unit, or the lungs, which can directly affect generated speech as well. A variety of sounds are also generated by these structures while breathing, including snoring, stridor, dysphagia, and cough. The source-filter (SF) model of speech is one of the earlier models of speech production. It assumes that speech is the result of filtering an excitation or source signal by a linear filter. The source and filter are assumed to be independent. Even though the SF model represents the speech production mechanism, there needs to be a tractable way of estimating the excitation and the filter. The estimation of both of them given speech falls under the general category of signal deconvolution problems, and hence there is no unique solution. There are several variations of the source-filter model in the literature, which assume different structures on the source and the filter, and there are various ways to estimate their parameters. The estimated parameters are used in various speech applications such as automatic speech recognition, text to speech, speech enhancement, etc. Even though the SF model is a model of speech production, it is also used in applications such as Parkinson's disease classification and asthma classification.

The existing source-filter models show much success in various applications; however, we believe that they fall short in two respects. The first limitation is that these models lack a connection to the physics of sound generation or propagation. The second limitation is that they are not fully probabilistic. The inherent nature of the airflow is stochastic because of the presence of turbulence. Hence, probabilistic modeling is necessary to model the stochastic process. Probabilistic models come with several other advantages: 1) systematic incorporation of prior knowledge into the models through probabilistic priors, 2) estimation of the uncertainty of the model parameters, 3) sampling of new data points, and 4) evaluation of the likelihood of the observed speech.

We start with the governing equation of sound generation and use a simplified geometry of the vocal folds. We show that the sound generated by the vocal folds consists of two parts. The first part is due to the difference between the subglottal and supraglottal pressures. The second part is due to the sound generated by turbulence. The first part is dominant in voiced sounds, and the second part is dominant in unvoiced sounds. We further assume plane wave propagation in the vocal tract and no feedback from the vocal tract on the vocal folds. The resulting model is an excitation passing through an all-pole filter, where the excitation is the sum of two signals. The first signal is quasi-periodic, and the shape of each cycle depends on the time-varying area of the glottis. The second signal is stochastic because the turbulence is modeled as white noise passed through a filter. We further convert the model into a probabilistic one by assuming the following distributions on the excitations and filters. We model the excitation using a Bernoulli-Gaussian distribution. Filter coefficients are modeled using a Gaussian distribution. The noise distribution is also Gaussian. Given these distributions, the likelihood of the speech can be derived as a closed-form expression. Similarly, we impose an appropriate prior on the model’s parameters and make a maximum a posteriori estimation of the parameters. The model assumptions can, however, be changed or approximated depending on the application, resulting in different estimation procedures. To validate the model, we apply it to seven applications as follows:

1. Analysis and Synthesis: This application is to understand the representation power of the model.

2. Robust GCI detection: This shows the usefulness of the estimated excitation, and the probabilistic modeling helps to incorporate second-order statistics for robust excitation estimation.

3. Probabilistic glottal inverse filtering: This application shows the usefulness of the prior distribution on filters.

4. Neural speech synthesis: We show that the model’s reformulation with the neural network results in a computationally efficient neural speech synthesis.

5. Prosthetic esophageal (PE) to normal speech conversion: We use the probabilistic model for detecting the impulses in the noisy signal to convert the PE speech to normal speech.

6. Robust essential vocal tremor classification: This application shows the usefulness of robust excitation estimation in pathological speech such as essential vocal tremor.

7. Snorer group classification: Based on the analogy between voiced speech production and snore production, the derived model is applicable for snore signals. We also use the parameter of the model to classify the snorer groups.
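The generative structure behind these applications (a sparse Bernoulli-Gaussian excitation driving an all-pole filter, observed with additive Gaussian noise) can be sketched in a few lines. This is a simplified illustration, not the thesis model: it omits the quasi-periodic glottal component and the priors on the filter, and the function names, the sign convention of the filter coefficients, and all parameter values are assumptions.

```python
import random


def bernoulli_gaussian(n, p, sigma, rng):
    """Draw n samples: each is N(0, sigma^2) with probability p, else 0."""
    return [rng.gauss(0.0, sigma) if rng.random() < p else 0.0
            for _ in range(n)]


def all_pole_filter(excitation, coeffs):
    """Apply s[n] = e[n] + sum_k coeffs[k-1] * s[n-k] (assumed convention)."""
    out = []
    for n, e in enumerate(excitation):
        acc = e
        for k, a in enumerate(coeffs, start=1):
            if n - k >= 0:
                acc += a * out[n - k]
        out.append(acc)
    return out


def synthesize(n, p, sigma, coeffs, noise_sigma, seed=0):
    """Sample a noisy signal from the simplified source-filter model."""
    rng = random.Random(seed)
    excitation = bernoulli_gaussian(n, p, sigma, rng)
    clean = all_pole_filter(excitation, coeffs)
    return [s + rng.gauss(0.0, noise_sigma) for s in clean]


# Usage with illustrative values: sparse excitation, one stable pole.
signal = synthesize(n=200, p=0.05, sigma=1.0, coeffs=[0.9], noise_sigma=0.01)
```

Inference runs in the opposite direction: given only the observed signal, the excitation and filter coefficients are estimated, which is where the priors and the maximum a posteriori formulation come in.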

Google Scholar

Research Guides : Prasanta Kumar Ghosh

Publications

- Achuth Rao M V, Yamini B K, Ketan J, Preetie Shetty A, Pal P, Shivashankar N, and Prasanta Kumar Ghosh, "Automatic Classification of Healthy Subjects and Patients With Essential Vocal Tremor Using Probabilistic Source-Filter Model Based Noise Robust Pitch Estimation", accepted in Journal of Voice, 2021. [pdf]

- Siddharth Subramani, Achuth Rao M V, Anwesha Roy, Prasanna Suresh Hegde, and Prasanta Kumar Ghosh, "SFNet: A Computationally Efficient Source Filter Model Based Neural Speech Synthesis", accepted in IEEE Signal Processing Letters, 2020. [pdf]

- Achuth Rao M V and Prasanta Kumar Ghosh, "Glottal inverse filtering using probabilistic weighted linear prediction", accepted in IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP), 2019. [pdf]

- Achuth Rao M V and Prasanta Kumar Ghosh, "PSFM - A Probabilistic Source Filter Model for Noise Robust Glottal Closure Instant Detection", accepted in IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP), 2018. [pdf]

- Achuth Rao M V, Shiny Victory and Prasanta Kumar Ghosh, "Effect of source filter interaction on isolated vowel-consonant-vowel perception", accepted in The Journal of the Acoustical Society of America, 2018. [pdf]

- Achuth Rao M V, Shivani Yadav and Prasanta Kumar Ghosh, "A dual source-filter model of snore audio for snorer group classification", accepted in Interspeech 2017. [pdf]

Mannem Renuka's MTech by Research

Speech task-specific representation learning using acoustic-articulatory data

Human speech production involves modulation of the air stream by the vocal tract shape determined by the articulatory configuration. Articulatory gestures are often used to represent the speech units. It has been shown that the articulatory representations contain information complementary to the acoustics. Thus, a speech task could benefit from the representations derived from both acoustic and articulatory data. A typical acoustic representation consists of spectral and temporal characteristics, e.g., Mel Frequency Cepstral Coefficients (MFCCs), Line Spectral Frequencies (LSF), and Discrete Wavelet Transform (DWT). On the other hand, articulatory representations vary depending on how the articulatory movements are captured. For example, when Electro-Magnetic Articulography (EMA) is used, the recorded raw movements of the EMA sensors placed on the tongue, jaw, upper lip, and lower lip, and tract variables derived from them, have often been used as articulatory representations. Similarly, when real-time Magnetic Resonance Imaging (rtMRI) is used, articulatory representations are derived primarily based on the Air-Tissue Boundaries (ATB) in the rtMRI video. The low resolution and SNR of the rtMRI video make the ATB segmentation challenging. Therefore, we propose various supervised ATB segmentation algorithms, which include semantic segmentation and object contour detection using deep convolutional networks. The proposed approaches predict ATBs better than the existing baselines, namely, Maeda Grid and Fisher Discriminant Measure based schemes. We also propose a deep fully-connected neural network based ATB correction scheme as a post-processing step to improve upon the predicted ATBs. However, articulatory data is not directly available in practice, unlike the speech recording. Thus, we also consider the articulatory representations derived from acoustics using an Acoustic-to-Articulatory Inversion (AAI) method.

Research Guides : Prasanta Kumar Ghosh

Publications

- Renuka Mannem and Prasanta Kumar Ghosh, "A deep neural network based correction scheme for improved air-tissue boundary prediction in real-time magnetic resonance imaging video", accepted in Computer Speech and Language, Elsevier, 2020. [pdf]

- Renuka Mannem, Himajyothi Rajamahendravarapu, Aravind Illa and Prasanta Kumar Ghosh, "Speech rate task-specific representation learning from acoustic-articulatory data", accepted in Interspeech 2020. [pdf][slides][presentation]

- Renuka Mannem, Navaneetha Gaddam and Prasanta Kumar Ghosh, "Air-tissue boundary segmentation in real time Magnetic Resonance Imaging video using 3-D convolutional neural network", accepted in Interspeech 2020. [pdf][slides][presentation]

- Renuka Mannem, Himajyothi Rajamahendravarapu, Aravind Illa, Prasanta Kumar Ghosh, "Speech rate estimation using representations learned from speech with convolutional neural network", accepted in SPCOM 2020. [pdf]

- Renuka Mannem, Jhansi Mallela, Aravind Illa and Prasanta Kumar Ghosh, "Acoustic and articulatory feature based speech rate estimation using a convolutional dense neural network", accepted in Interspeech 2019, Graz, Austria. [pdf]

- Valliappan CA, Avinash Kumar, Renuka Mannem, Karthik Girija Ramesan and Prasanta Kumar Ghosh, "An improved air tissue boundary segmentation technique for real time magnetic resonance imaging video using segnet", accepted in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 2019, pages: 5921-5925. [pdf]

- Renuka Mannem and Prasanta Kumar Ghosh, "Air-tissue boundary segmentation in real time magnetic resonance imaging video using a convolutional encoder-decoder network", accepted in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 2019, pages: 5941-5945. [pdf]

- Renuka Mannem, Valliappan C A and Prasanta Kumar Ghosh, "A SegNet Based Image Enhancement Technique for Air-Tissue Boundary Segmentation in Real-Time Magnetic Resonance Imaging Video", accepted in National Conference on Communications (NCC) 2019, Bangalore, India, Pages: 1-6. [pdf]

- Valliappan CA, Renuka Mannem and Prasanta Kumar Ghosh, "Air-Tissue Boundary Segmentation in Real-Time Magnetic Resonance Imaging Video using Semantic Segmentation with Fully Convolutional Networks", accepted in Interspeech, Hyderabad, India, 2018, Page(s): 3132-3136. [pdf]

Chiranjeevi's PhD

Pronunciation assessment and semi-supervised feedback prediction for spoken English tutoring

Spoken English pronunciation quality is often influenced by the nativity of a learner for whom English is a second language. Typically, a learner's pronunciation quality depends on four sub-qualities: 1) phoneme quality, 2) syllable stress quality, 3) intonation quality, and 4) fluency. To achieve good pronunciation quality, learners need to minimize their nativity influences in each of the four sub-qualities, which can be achieved with effective spoken English tutoring methods. However, these methods are expensive as they require highly proficient English experts. Where a cost-effective solution is required, it is useful to have a tutoring system that assesses a learner's pronunciation and provides feedback on each of the four sub-qualities to minimize nativity influences, in a manner similar to that of a human expert. Such systems are also useful for learners who cannot access high-quality tutoring due to demographic and physical constraints. In this thesis, several methods are developed to assess pronunciation quality and provide feedback for such a spoken English tutoring system for Indian learners.

Google Scholar

Research Guides : Prasanta Kumar Ghosh

Publications

- Chiranjeevi Yarra, Kausthubha N K and Prasanta Kumar Ghosh, "SPIRE-ABC: An online tool for acoustic-unit boundary correction (ABC) via crowdsourcing", accepted in Oriental COCOSDA 2020. [pdf]

- Avni Rajpal, Achuth Rao M V, Chiranjeevi Yarra, Ritu Aggarwal, Prasanta Kumar Ghosh, "Pseudo-likelihood correction technique for low-resource accented ASR", accepted in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2020.

- Chiranjeevi Yarra, Ritu Aggarwal, Avni Rajpal and Prasanta Kumar Ghosh, "Indic TIMIT and Indic English lexicon: A speech database of Indian speakers using TIMIT stimuli and a lexicon from their mispronunciations", accepted in Oriental COCOSDA 2019.

- Chiranjeevi Yarra, Aparna Srinivasan, Chandana Srinivasa, Ritu Aggarwal and Prasanta Kumar Ghosh, "voisTUTOR corpus: A speech corpus of Indian L2 English learners for pronunciation assessment", accepted in Oriental COCOSDA 2019.

- Chiranjeevi Yarra and Prasanta Kumar Ghosh, "voisTUTOR: Virtual Operator for Interactive Spoken English TUTORing", accepted in 8th Workshop on Speech and Language Technology in Education, 2019.

- Chiranjeevi Yarra, Manoj Kumar Ramanathi and Prasanta Kumar Ghosh, "Comparison of automatic syllable stress detection quality with time-aligned boundaries and context dependencies", accepted in 8th Workshop on Speech and Language Technology in Education, 2019.

- Aparna Srinivasan, Chiranjeevi Yarra and Prasanta Kumar Ghosh, "Automatic assessment of pronunciation and its dependent factors by exploring their interdependencies using DNN and LSTM", accepted in 8th Workshop on Speech and Language Technology in Education, 2019.

- Sweekar Sudhakara, Manoj Kumar Ramanathi, Chiranjeevi Yarra, Anurag Das and Prasanta Kumar Ghosh, "Noise robust goodness of pronunciation measures using teacher’s utterance", accepted in 8th Workshop on Speech and Language Technology in Education, 2019.

- Manoj Kumar Ramanathi, Chiranjeevi Yarra and Prasanta Kumar Ghosh, "ASR inspired syllable stress detection for pronunciation evaluation without using a supervised classifier and syllable level features", accepted in Interspeech 2019, Graz, Austria. [pdf]

- Atreyee Saha, Chiranjeevi Yarra and Prasanta Kumar Ghosh, "Low resource automatic intonation classification using gated recurrent unit (GRU) networks pre-trained with synthesized pitch patterns", accepted in Interspeech 2019, Graz, Austria. [pdf]

- Sweekar Sudhakara, Manoj Kumar Ramanathi, Chiranjeevi Yarra and Prasanta Kumar Ghosh, "An improved goodness of pronunciation (GoP) measure for pronunciation evaluation with DNN-HMM system considering HMM transition probabilities", accepted in Interspeech 2019, Graz, Austria. [pdf]

- Chiranjeevi Yarra, Aparna Srinivasan, Sravani Gottimukkala and Prasanta Kumar Ghosh, "SPIRE-fluent: A self-learning app for tutoring oral fluency to second language English learners", accepted in Interspeech 2019, Graz, Austria. [pdf]

- Chiranjeevi Yarra, Supriya Nagesh and Prasanta Kumar Ghosh, "Noise robust speech rate estimation using SNR dependent sub-band selection and peak detection strategy", accepted in The Journal of the Acoustical Society of America. [pdf]

- Chandana S, Chiranjeevi Yarra, Ritu Aggarwal, Sanjeev Kumar Mittal, Kausthubha N K, Raseena K T, Astha Singh and Prasanta Kumar Ghosh, "Automatic visual augmentation for concatenation based synthesized articulatory videos from real-time MRI data for spoken language training", accepted in Interspeech 2018, Hyderabad, India. [pdf]

- Anand P A, Chiranjeevi Yarra, Kausthubha N K and Prasanta Kumar Ghosh, "Intonation tutor by SPIRE (In-SPIRE): An online tool for an automatic feedback to the second language learners in learning intonation", accepted in Interspeech 2018, Hyderabad, India. [pdf]

- Chiranjeevi Yarra, Anand P A, Kausthubha N K and Prasanta Kumar Ghosh, "SPIRE-SST: An automatic web-based self-learning tool for syllable stress tutoring (SST) to the second language learners", accepted in Proc. Interspeech, Hyderabad, India, 2018, Page(s): 2390-2391. [pdf]

- Urvish Desai, Chiranjeevi Yarra and Prasanta Kumar Ghosh, "Concatenative articulatory video synthesis using real-time MRI data for spoken language training", accepted in Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2018, page(s): 4999-5003. [pdf]

- Chiranjeevi Yarra and Prasanta Kumar Ghosh, "Automatic intonation classification using temporal patterns in utterance-level pitch contour and perceptually motivated pitch transformation", accepted in The Journal of the Acoustical Society of America 144.5 (2018): EL471-EL476.

- Chiranjeevi Yarra, Om D. Deshmukh and Prasanta Kumar Ghosh, "A frame selective dynamic programming approach for noise robust pitch estimation", accepted in The Journal of the Acoustical Society of America, 143, no. 4 (2018): 2289-2300. [pdf]

- Srinivasa Raghavan, Nisha Meenakshi, Sanjeev Kumar Mittal, Chiranjeevi Yarra, Anupam Mandal, K R Prasanna Kumar and Prasanta Kumar Ghosh, "A Comparative Study on the Effect of Different Codecs on Speech Recognition Accuracy Using Various Acoustic Modeling Techniques", accepted in Communications (NCC), 2017 Twenty-third National Conference on (pp. 1-6). IEEE. [pdf][poster]

- Chiranjeevi Yarra, Om D. Deshmukh, and Prasanta Kumar Ghosh, "Automatic detection of syllable stress using sonority based prominence features for pronunciation evaluation", accepted in Acoustics, Speech and Signal Processing (ICASSP), 2017 IEEE International Conference on (pp. 5845-5849). IEEE. [pdf][poster]

- Nagesh, Supriya, Chiranjeevi Yarra, Om D. Deshmukh, and Prasanta Kumar Ghosh, "A robust speech rate estimation based on the activation profile from the selected acoustic unit dictionary", accepted in 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5400-5404. IEEE. [pdf]

- Yarra, Chiranjeevi, Om D. Deshmukh, and Prasanta Kumar Ghosh, "A mode-shape classification technique for robust speech rate estimation and syllable nuclei detection", accepted in Speech Communication 78 (2016): 62-71. [pdf]

- Nisha Meenakshi, Chiranjeevi Yarra, B. K. Yamini and Prasanta Kumar Ghosh, "Comparison of speech quality with and without sensors in electromagnetic articulograph AG 501 recording", accepted in InterSpeech, 2014. [pdf]

Nisha Meenakshi's Ph.D

Analysis of whispered speech and its conversion to neutral speech

Whispering is an indispensable form of communication that emerges in private conversations as well as in pathological situations. In conditions such as partial or total laryngectomy, spasmodic dysphonia, etc., alaryngeal speech such as esophageal and tracheo-esophageal speech, as well as hoarse whispered speech, is common. Whispered speech is primarily characterized by the lack of vocal fold vibrations and, hence, pitch. In recent times, applications such as voice activity detection, speaker identification and verification, and speech recognition have been extended to whispered speech as well. Several efforts have also been undertaken to convert the less intelligible whispered speech into more natural-sounding neutral speech. Although these applications are supported by the literature, research towards gaining a better understanding of whispered speech remains largely unexplored. Hence, the aim of the thesis is two-fold: 1) to analyze different characteristics of whispered speech using both speech and articulatory data, and 2) to perform whispered speech to neutral speech conversion using state-of-the-art modelling techniques.

Google Scholar

Research Guides : Prasanta Kumar Ghosh

Publications

- G. Nisha Meenakshi and Prasanta Kumar Ghosh, "Whispered speech to neutral speech conversion using bidirectional LSTMs", accepted in Proc. Interspeech, Hyderabad, India, 2018, Page(s): 491-495. [pdf]

- Abinay Reddy N, Achuth Rao M V, G. Nisha Meenakshi and Prasanta Kumar Ghosh, "Reconstructing neutral speech from tracheoesophageal speech", accepted in Proc. Interspeech, Hyderabad, India, 2018, Page(s): 1541-1545. [pdf]

- Astha Singh, G. Nisha Meenakshi and Prasanta Kumar Ghosh, "Relating articulatory motions in different speaking rates", accepted in Proc. Interspeech, Hyderabad, India, 2018, Page(s): 2992-2996. [pdf]

- G. Nisha Meenakshi and Prasanta Kumar Ghosh, "A robust Voiced/Unvoiced phoneme classification from whispered speech using the "color" of whispered phonemes and Deep Neural Network", accepted in Proc. Interspeech 2017, 503-507. [pdf][poster]

- Srinivasa Raghavan, Nisha Meenakshi, Sanjeev Kumar Mittal, Chiranjeevi Yarra, Anupam Mandal, K R Prasanna Kumar and Prasanta Kumar Ghosh, "A Comparative Study on the Effect of Different Codecs on Speech Recognition Accuracy Using Various Acoustic Modeling Techniques", accepted in Communications (NCC), 2017 Twenty-third National Conference on (pp. 1-6). IEEE. [pdf][poster]

- Pradyumna Suresha, Supriya Nagesh, Priyadarshini Savan Roshan, Aditya Gaonkar P, Nisha Meenakshi and Prasanta Kumar Ghosh, "A High Resolution ENF Based MultiStage Classifier for Location Forensics of Media Recordings", accepted in Communications (NCC), 2017 Twenty-third National Conference on (pp. 1-6). IEEE. [pdf][poster]

- Aravind Illa, Nisha Meenakshi G, and Prasanta Kumar Ghosh, "A comparative study of acoustic-to-articulatory inversion for neutral and whispered speech", accepted in Acoustics, Speech and Signal Processing (ICASSP), 2017 IEEE International Conference on (pp. 5075-5079). IEEE. [pdf][poster]

- Meenakshi, G. Nisha, and Prasanta Kumar Ghosh, "A discriminative analysis within and across voiced and unvoiced consonants in neutral and whispered speech in multiple Indian languages", accepted in Sixteenth Annual Conference of the International Speech Communication Association. 2015.

- Nisha Meenakshi and Prasanta Kumar Ghosh, "Robust whisper activity detection using long-term log energy variation of sub-band signal", accepted in IEEE Signal Processing Letters, Volume 22, Issue 11, June 2015, pp 1859-1863. [pdf][codes]

- Nisha Meenakshi and Prasanta Kumar Ghosh, "Automatic Gender Classification Using the Mel Frequency Cepstrum of Neutral and Whispered Speech: a Comparative Study", accepted in NCC, 2015. [pdf]

- Nisha Meenakshi, Chiranjeevi Yarra, B. K. Yamini and Prasanta Kumar Ghosh, "Comparison of speech quality with and without sensors in electromagnetic articulograph AG 501 recording", accepted in InterSpeech, 2014. [pdf]

Karthik Girija Ramesh's M.Tech by research

Binaural source localization using subband reliability and interaural time difference patterns

Machine localization of sound sources is necessary for a wide range of applications, including human-robot interaction, surveillance and hearing aids. Robot sound localization algorithms have been proposed using microphone arrays with varying numbers of microphones. Adding more microphones helps increase the localization performance, as more spatial cues can be obtained based on the number and arrangement of the microphones.

However, humans have an incredible ability to accurately localize and attend to target sound sources even in adverse noise conditions. The perceptual organization of sounds in complex auditory scenes is done using various cues that help us group or segregate sounds. Among these, two major spatial cues are the interaural time difference (ITD) and the interaural level/intensity difference (ILD/IID). An algorithm inspired by human binaural localization would extract these features from the input signals. Popular algorithms for binaural source localization model the distributions of ITD and ILD in each frequency subband (typically in the range of 80 Hz to 5 kHz for a speech source) using Gaussian Mixture Models (GMMs) and perform likelihood integration across the time-frequency plane to estimate the direction of arrival (DoA) of the sources.

In this thesis, we show that the localization performance of a GMM based scheme varies across subbands. We propose a weighted subband likelihood scheme in order to exploit the subband reliability for localization. The weights are computed by applying a non-linear warping function to the subband reliabilities. Source localization results demonstrate that the proposed weighted scheme performs better than weighting all subbands uniformly. In particular, the best set of weights closely corresponds to the case of selecting only the most reliable subband.
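The weighted subband likelihood idea can be illustrated with a small sketch. This is not the thesis implementation: the power-law warp, the exponent `gamma`, the function names `warp_weights` and `localize`, and the toy numbers are all assumptions made for illustration. The abstract only states that a non-linear warping function is applied to the subband reliabilities.

```python
import numpy as np

def warp_weights(reliability, gamma=4.0):
    """Map subband reliabilities to normalized weights.

    A power-law warp is an assumed choice; the abstract only says the
    warping function is non-linear.
    """
    r = np.asarray(reliability, dtype=float)
    w = r ** gamma
    return w / w.sum()

def localize(log_lik, weights):
    """Pick the direction index with the highest weighted log-likelihood.

    log_lik has shape (n_subbands, n_directions) and is assumed to be
    already summed over time frames.
    """
    scores = np.asarray(weights) @ np.asarray(log_lik, dtype=float)
    return int(np.argmax(scores))

# Toy data (hypothetical): three subbands, three candidate directions.
reliability = np.array([0.9, 0.5, 0.3])
log_lik = np.array([
    [-1.0, -3.0, -5.0],  # most reliable subband favours direction 0
    [-4.0, -1.0, -4.0],  # less reliable subband favours direction 1
    [-5.0, -0.5, -3.0],  # least reliable subband favours direction 1
])

uniform = np.full(3, 1.0 / 3.0)
doa_uniform = localize(log_lik, uniform)
doa_weighted = localize(log_lik, warp_weights(reliability, gamma=4.0))
```

In this toy case the uniform weighting is dominated by the two less reliable subbands, while the warped weights follow the most reliable one. As `gamma` grows, the weights approach a one-hot vector on the most reliable subband, which mirrors the observation that the best weights resemble selecting only that subband.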

We also propose a new binaural localization technique in which templates that capture the direction-specific interaural time difference patterns are used to localize sources. These templates are obtained using histograms of ITDs in each subband. DoA is estimated using a template matching scheme, which is experimentally found to perform better than the GMM based scheme. The concept of matching interaural time difference patterns is also extended to binaural localization of multiple speech sources.
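A minimal sketch of histogram-based template matching follows. It is an illustration under stated assumptions, not the method from the thesis: the inner-product similarity, the bin layout, the simulated Gaussian ITDs, and the names `itd_histogram`, `build_template` and `match_direction` are all hypothetical.

```python
import numpy as np

def itd_histogram(itds, bins):
    """Normalized histogram of ITD values for one subband."""
    h, _ = np.histogram(itds, bins=bins)
    h = h.astype(float)
    return h / max(h.sum(), 1.0)

def build_template(itds_per_subband, bins):
    """Stack per-subband histograms into a (n_subbands, n_bins) pattern."""
    return np.stack([itd_histogram(x, bins) for x in itds_per_subband])

def match_direction(observed, templates):
    """Return the direction whose template best matches the observation.

    Similarity is a plain inner product, which is an assumed choice.
    """
    scores = {d: float(np.sum(observed * t)) for d, t in templates.items()}
    return max(scores, key=scores.get)

# Simulated ITDs in milliseconds (hypothetical values), two subbands each.
rng = np.random.default_rng(0)
bins = np.linspace(-1.0, 1.0, 41)
true_itd = {-30: -0.3, 0: 0.0, 30: 0.3}  # direction in degrees -> mean ITD

def simulate(mean, n=500):
    return [rng.normal(mean, 0.05, n) for _ in range(2)]

templates = {d: build_template(simulate(m), bins) for d, m in true_itd.items()}
observed = build_template(simulate(true_itd[30]), bins)
estimated = match_direction(observed, templates)
```

Here the templates are built once per direction from training ITDs, and a test recording is localized by comparing its own ITD histograms against each template.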

Google Scholar

Research Guides : Prasanta Kumar Ghosh

Publications

- Valliappan CA, Avinash Kumar, Renuka Mannem, Karthik Girija Ramesan and Prasanta Kumar Ghosh, "An improved air tissue boundary segmentation technique for real time magnetic resonance imaging video using segnet", accepted in Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 2019, pages: 5921-5925. [pdf]

- Girija Ramesan Karthik, Parth Suresh and Prasanta Kumar Ghosh, "Subband weighting for binaural speech source localization", accepted in Proc. Interspeech, Hyderabad, India, 2018, Page(s): 861-865. [pdf]

- Karthik Girija Ramesan and Prasanta Kumar Ghosh, "Binaural speech source localization using template matching of interaural time difference patterns", accepted in Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2018, page(s): 5164-5168. [pdf]

- Girija Ramesan Karthik and Prasanta Kumar Ghosh, "Subband selection for binaural speech source localization", accepted in Proc. Interspeech 2017, Stockholm, Sweden, 1929-1933. [pdf][poster]

Pavan Subhaschandra Karjol's M.Tech by research

Speech enhancement using deep mixture of experts

Speech enhancement is at the heart of many applications such as speech communication, automatic speech recognition, hearing aids, etc. In this work, we consider speech enhancement under the framework of a multiple deep neural network (DNN) system. DNNs have been extensively used in speech enhancement due to their ability to capture complex variations in the input data. As a natural extension, researchers have used variants of a network with multiple DNNs for speech enhancement. Input data could be clustered to train each DNN, or all the DNNs could be trained jointly without any clustering. In this work, we propose clustering methods for training multiple DNN systems and their variants for speech enhancement. One of the proposed works involves grouping phonemes into broad classes and training a separate DNN for each class. Such an approach is found to perform better than single DNN based speech enhancement. However, it relies on phoneme information, which may not be available for all corpora. Hence, we propose a hard expectation-maximization (EM) based task-specific clustering method, which automatically determines clusters without relying on the knowledge of speech units. The idea is to redistribute the data points among multiple DNNs such that it enables better speech enhancement. The experimental results show that the hard EM based clustering performs better than the single DNN based speech enhancement and provides results similar to those of the broad phoneme class based approach.
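The hard EM redistribution of data points among experts can be sketched as follows. This is a simplified illustration, not the system described in the thesis: each DNN is replaced by a least-squares linear map so the example stays small, and the names `fit_expert` and `hard_em`, the random initial assignment, and the toy data are all assumptions.

```python
import numpy as np

def fit_expert(X, Y):
    """Least-squares linear map, used here as a stand-in for training one DNN."""
    W, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return W

def hard_em(X, Y, n_experts=2, n_iters=10, seed=0):
    """Alternate between retraining experts and hard reassignment of points."""
    rng = np.random.default_rng(seed)
    assign = rng.integers(n_experts, size=len(X))  # assumed random start
    for _ in range(n_iters):
        # M-step: retrain each expert on the points currently assigned to it.
        experts = []
        for k in range(n_experts):
            idx = assign == k
            if not idx.any():
                idx = np.ones(len(X), dtype=bool)  # empty cluster: use all data
            experts.append(fit_expert(X[idx], Y[idx]))
        # Hard E-step: move each point to the expert with the smallest error.
        errs = np.stack(
            [((X @ W - Y) ** 2).sum(axis=1) for W in experts], axis=1
        )
        assign = errs.argmin(axis=1)
    return experts, assign, float(errs.min(axis=1).mean())

# Toy data (hypothetical): two different linear mappings from input to target.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 3))
W_a, W_b = rng.normal(size=(3, 2)), rng.normal(size=(3, 2))
Y = np.vstack([X[:100] @ W_a, X[100:] @ W_b])

single_err = float(((X @ fit_expert(X, Y) - Y) ** 2).sum(axis=1).mean())
experts, assign, mix_err = hard_em(X, Y)
```

The reported mixture error uses the best expert for each point, so it measures how well the experts partition the data rather than the behaviour of a deployed system, which would also need a rule for choosing an expert at test time.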

Research Guides : Prasanta Kumar Ghosh

Publications

- Pavan Karjol and Prasanta Kumar Ghosh, "Speech enhancement using deep mixture of experts based on hard expectation maximization", accepted in Proc. Interspeech, Hyderabad, India, 2018, Page(s): 3254-3258. [pdf]

- Pavan Karjol and Prasanta Kumar Ghosh, "Broad Phoneme Class Specific Deep Neural Network Based Speech Enhancement", accepted in Proc. International Conference on Signal Processing and Communications (SPCOM), Bangalore, India, 2018, Page(s): 372-376. [pdf]

- Pavan Karjol, Ajay Kumar M and Prasanta Kumar Ghosh, "Speech enhancement using multiple deep neural networks", accepted in Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2018, page(s): 5049-5052. [pdf]